4.5 Least Squares

approximate linear system

1Reading¶

Material related to this page, as well as additional exercises, can be found in LAA 6.5 and VMLS 12.1.

2Learning Objectives¶

By the end of this page, you should know:

- the least squares problem and how to solve it

- how the least squares problem relates to a solving an approximate linear system

3Introduction: Inconsistent Linear Equations¶

Suppose we are presented with an inconsistent set of linear equations . This typically coincides with being a “tall matrix”, i.e., . This corresponds to an overdetermined system of linear equations in unknowns. A typical setup assumes this arises is one of data fitting: we are given feature variables and response variables , and we believe that for measurements and are our model parameters. We will revisit this application in detail later.

The question then becomes, if no exists such that exists, what should we do? A natural idea is to select an that makes the error or residual as small as possible, i.e., to find the that minimizes . Now minimizing the residual or its square gives the same answer, so we may as well minimize

the sum of squares of the residuals.

4The Least Squares Problem¶

4.1Solving by Orthogonal Projection¶

There are many ways of deriving the solution to (LS): you may have seen a vector calculus-based derivation in Math 1410. Here, we will use our new understanding of orthogonal projections to provide an intuitive and elegant geometric derivation.

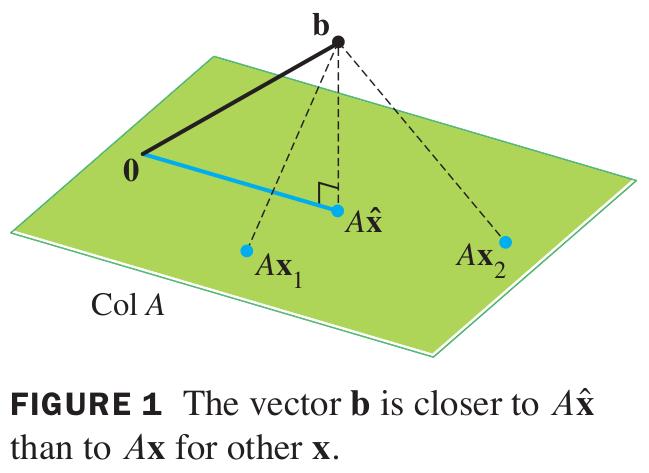

Our starting point is a column interpretation of the least squares objective: let be the columns of : then the least squares (LS) problem is the problem of finding a linear combination of the columns that is closest to the vector , with coefficients specified by :

To prove the above geometrically intuitive fact (see Figure 1), we need to decompose into its orthogonal projection onto Col, which we denote by , and the element in its orthogonal complement Col, which we denote by . Recall and Col.

We then have that

Since Col, so is (why?), and thus we have decomposed into components lying in Col and Col. Using our generalized Pythagorean theorem, it then follows that

The above expression can be made as small as possible be choosing such that , which always has a solution (why?) leaving the residual error , ie, the component of that is orthogonal to Col.

This gives us a nice geometric interpretation of the lest squares solution , but how should we compute it? We now recall from here that ColNull. So, we therefore have that Null. This means that

or, equivalently that

The above equations are the normal equations associated with the lest squares problem specified by and . We have just informally argued that the set of least squares solutions coincide with the set of solutions to the normal equations (NE): this is in fact true, and can be proven (we wont do that here).

Thus, we have reduced solving a least squares problem to our favorite problem, solving a system of linear equations! One question you might have is when do the normal equations (NE) have a unique solution? The answer, perhaps unsurprisingly, is when the columns of are linearly independent, and hence form a basis for Col. The following theorem is a useful summary of our discussion thus fur:

Python break!¶

In the following code, we show how to use np.linalg.lstsq in Python to solve the least squares problem, and also how to obtain the solution by solving a linear system (np.linalg.solve) as illustrated in Example 1. If there is more than one solution to the least squares problem, then the two strategies ( np.linalg.lstsq and np.linalg.solve) might possibly return different solutions because each NumPy function uses a different numerical strategy to obtain .

# Least squares

import numpy as np

def least_squares_linalg(A, b):

print("\nA: \n", A, "\nb: ", b)

print("\nlstsq function\n")

x, residual, rank, sing_val = np.linalg.lstsq(A, b, rcond=None)

# residual = 0 if rank of A < size of x (or) number of rows of A <= size of x

print("Solution (x): \n", x, "\nResidual: ", residual)

def least_squares(A, b):

print("\nsolving a linear system\n")

x = np.linalg.solve(A.T @ A, A.T @ b)

residual = np.linalg.norm(A@x- b)**2

print("Solution (x): \n", x, "\nResidual: ", residual)

A = np.array([[1, -2],

[5, 3]])

b = np.array([8, 1])

least_squares_linalg(A, b)

least_squares(A, b)

A1 = np.array([[1, 1, 0, 0],

[1, 1, 0, 0],

[1, 0, 1 , 0],

[1, 0, 1, 0],

[1, 0, 0, 1],

[1, 0, 0, 1]])

b1 = np.array([-3, -1, 0, 2, 5, 1])

# Notice the difference in both the solutions

least_squares_linalg(A1, b1)

least_squares(A1, b1)

A2 = np.array([[4, 0], [0, 2],[1, 1]])

b2 = np.array([2, 0, 11])

least_squares_linalg(A2, b2)

least_squares(A2, b2)

A:

[[ 1 -2]

[ 5 3]]

b: [8 1]

lstsq function

Solution (x):

[ 2. -3.]

Residual: []

solving a linear system

Solution (x):

[ 2. -3.]

Residual: 0.0

A:

[[1 1 0 0]

[1 1 0 0]

[1 0 1 0]

[1 0 1 0]

[1 0 0 1]

[1 0 0 1]]

b: [-3 -1 0 2 5 1]

lstsq function

Solution (x):

[ 0.5 -2.5 0.5 2.5]

Residual: []

solving a linear system

Solution (x):

[-6. 4. 7. 9.]

Residual: 11.999999999999998

A:

[[4 0]

[0 2]

[1 1]]

b: [ 2 0 11]

lstsq function

Solution (x):

[1. 2.]

Residual: [84.]

solving a linear system

Solution (x):

[1. 2.]

Residual: 84.0