4.4 Orthogonal Projections and Orthogonal Subspaces

Splitting vectors into orthogonal pieces

1 Reading

Material related to this page, as well as additional exercises, can be found in ALA 4.4.

2 Learning Objectives

By the end of this page, you should know:

- orthogonal projection of vectors

- orthogonal subspaces

- relationship between dimensions of orthogonal subspaces

- orthogonality of the fundamental matrix subspaces and how they relate to a linear system

3 Introduction

We extend the idea of orthogonality between two vectors to orthogonality between subspaces. Our starting point is the idea of an orthogonal projection of a vector onto a subspace.

4 Orthogonal Projection

Let $V$ be a (real) inner product space, and let $W \subset V$ be a finite-dimensional subspace of $V$. The results we present are fairly general, but it may be helpful to think of $W$ as a subspace of $\mathbb{R}^n$.

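Note from Definition 2 that this means $\mathbf{v} \in V$ can be decomposed as the sum of its orthogonal projection $\mathbf{w} \in W$ and the perpendicular vector $\mathbf{z} = \mathbf{v} - \mathbf{w}$ that is orthogonal to $W$, i.e., $\mathbf{v} = \mathbf{w} + \mathbf{z}$.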

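When we have access to an orthonormal basis $\mathbf{u}_1, \dots, \mathbf{u}_k$ for $W$, constructing the orthogonal projection $\mathbf{w}$ of $\mathbf{v} \in V$ onto $W$ becomes quite simple: $\mathbf{w} = c_1 \mathbf{u}_1 + \cdots + c_k \mathbf{u}_k$, where $c_i = \langle \mathbf{v}, \mathbf{u}_i \rangle$ for $i = 1, \dots, k$.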
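The following is a minimal sketch of this construction, assuming the standard dot product on $\mathbb{R}^3$ and the usual orthonormal-basis formula $\mathbf{w} = \sum_i \langle \mathbf{v}, \mathbf{u}_i \rangle \mathbf{u}_i$. The plane, the vector `v`, and the helper names `dot` and `project` are illustrative choices, not taken from the text.

```python
import math

def dot(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def project(v, orthonormal_basis):
    """Orthogonal projection of v onto span(orthonormal_basis).

    Assumes the basis vectors are orthonormal; the formula
    w = sum_i <v, u_i> u_i is not valid for a general basis.
    """
    w = [0.0] * len(v)
    for u in orthonormal_basis:
        c = dot(v, u)                          # coefficient c_i = <v, u_i>
        w = [wi + c * ui for wi, ui in zip(w, u)]
    return w

s = 1 / math.sqrt(2)
U = [[s, s, 0.0], [0.0, 0.0, 1.0]]   # orthonormal basis of a plane W in R^3
v = [3.0, 1.0, 2.0]

w = project(v, U)                        # the piece of v lying in W
z = [vi - wi for vi, wi in zip(v, w)]    # the piece of v perpendicular to W

print(w)                                 # approximately [2, 2, 2]
print(z)                                 # approximately [1, -1, 0]
print([dot(z, u) for u in U])            # approximately [0, 0]
```

Up to floating-point rounding, `z` is orthogonal to both basis vectors, and hence to every vector in $W$, while `w + z` recovers `v`.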

We will see shortly that computing the orthogonal projection of a vector onto a subspace is exactly what solving a least-squares problem does, and that this operation lies at the heart of machine learning and data science.
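As a small preview of that connection, here is a sketch under stated assumptions: for a matrix $A$ with linearly independent columns, the least-squares solution $\hat{\mathbf{x}}$ satisfies the normal equations $A^\top A \hat{\mathbf{x}} = A^\top \mathbf{b}$, and $A\hat{\mathbf{x}}$ is the orthogonal projection of $\mathbf{b}$ onto $\text{Col}(A)$. The $3 \times 2$ matrix and right-hand side below are hypothetical, and exact rational arithmetic is used only to keep the output free of rounding noise.

```python
from fractions import Fraction as F

def dot(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

# Columns of a hypothetical 3x2 matrix A and a right-hand side b not in Col(A).
a1 = [F(1), F(1), F(1)]
a2 = [F(0), F(1), F(2)]
b = [F(1), F(0), F(2)]

# Normal equations (A^T A) x = A^T b, solved with Cramer's rule (2x2 case).
g11, g12, g22 = dot(a1, a1), dot(a1, a2), dot(a2, a2)
r1, r2 = dot(a1, b), dot(a2, b)
det = g11 * g22 - g12 * g12
x1 = (r1 * g22 - g12 * r2) / det
x2 = (g11 * r2 - g12 * r1) / det

w = [x1 * p + x2 * q for p, q in zip(a1, a2)]   # A x_hat, the projection of b
z = [bi - wi for bi, wi in zip(b, w)]           # residual b - A x_hat

print(x1, x2)                    # 1/2 1/2
print(dot(z, a1), dot(z, a2))    # 0 0
```

The residual is orthogonal to both columns of $A$, so $\mathbf{b} = \mathbf{w} + \mathbf{z}$ is precisely the decomposition into a component in $\text{Col}(A)$ and a component perpendicular to it.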

However, before that, we will explore the idea of orthogonal subspaces, and see that they provide a deep and elegant connection between the four fundamental subspaces of a matrix and whether a linear system has a solution.

5 Orthogonal Subspaces

An important geometric notion present in Example 2 is the orthogonal complement $W^\perp$ of a subspace $W \subset V$: the set of all vectors in $V$ that are orthogonal to every vector in $W$.

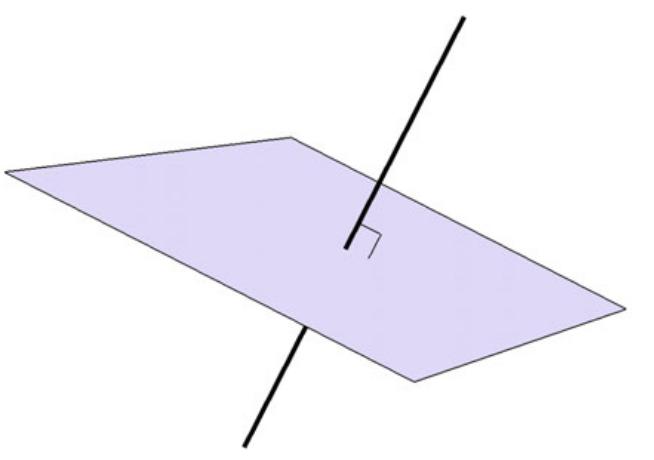

The orthogonal complement to a line is given in the figure below, which is discussed in Example 2.

Another direct consequence of Figure 3 is that a subspace $W$ and its orthogonal complement $W^\perp$ have complementary dimensions: when $V$ is finite dimensional, $\dim W + \dim W^\perp = \dim V$.

In Example 3, $W \subset \mathbb{R}^3$ is a plane, with $\dim W = 2$. Hence, we can conclude that $\dim W^\perp = 1$, i.e., $W^\perp$ is a line, which is indeed what we saw previously.
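To make the dimension count concrete, here is a short sketch for a plane in $\mathbb{R}^3$. The spanning vectors `w1` and `w2` are hypothetical (they are not the plane of Example 3), and the cross product is used as a convenient way, specific to $\mathbb{R}^3$, of producing a vector orthogonal to both.

```python
def dot(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def cross(x, y):
    """Cross product in R^3; the result is orthogonal to both x and y."""
    return [x[1] * y[2] - x[2] * y[1],
            x[2] * y[0] - x[0] * y[2],
            x[0] * y[1] - x[1] * y[0]]

# Two linearly independent vectors spanning a hypothetical plane W in R^3.
w1 = [1, 0, -1]
w2 = [0, 1, 2]

n = cross(w1, w2)                  # nonzero vector spanning the line W-perp

print(n)                           # [1, -2, 1]
print(dot(n, w1), dot(n, w2))      # 0 0

dim_W, dim_W_perp = 2, 1           # a plane and a line
print(dim_W + dim_W_perp == 3)     # True: the dimensions add up to dim R^3
```

Because `n` is orthogonal to both spanning vectors, it is orthogonal to every linear combination of them, i.e., to all of $W$.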

6 Orthogonality of the Fundamental Matrix Subspaces

We previously introduced the four fundamental subspaces associated with an $m \times n$ matrix $A$: the column, null, row, and left null spaces. We also saw that the null and row spaces are subspaces with complementary dimensions in $\mathbb{R}^n$, and the left null space and column space are subspaces with complementary dimensions in $\mathbb{R}^m$. Moreover, these pairs are orthogonal complements of each other with respect to the standard dot product.

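Let $A$ be an $m \times n$ matrix. Then,

$$\text{Null}(A) = \text{Row}(A)^\perp \subset \mathbb{R}^n, \qquad \text{LNull}(A) = \text{Col}(A)^\perp \subset \mathbb{R}^m.$$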

We will not go through the proof (although it is not hard), but instead focus on a very important practical consequence.
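Although we skip the proof, the orthogonality of these pairs is easy to check on an example. The sketch below computes null space bases by Gauss-Jordan elimination in exact rational arithmetic; the helper `null_space` and the $3 \times 4$ matrix `A` (whose third row is the sum of the first two, so its rank is 2) are illustrative assumptions, not part of the text.

```python
from fractions import Fraction

def dot(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def null_space(rows):
    """Basis of {x : M x = 0}, found by Gauss-Jordan elimination."""
    M = [[Fraction(e) for e in row] for row in rows]
    n_cols = len(M[0])
    pivots = []                      # pivots[i] = pivot column of row i
    r = 0
    for c in range(n_cols):
        if r == len(M):
            break
        # Look for a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if p is None:
            continue                 # no pivot here: c is a free column
        M[r], M[p] = M[p], M[r]
        M[r] = [e / M[r][c] for e in M[r]]
        for i in range(len(M)):
            factor = M[i][c]
            if i != r and factor != 0:
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
    basis = []
    for f in (c for c in range(n_cols) if c not in pivots):
        x = [Fraction(0)] * n_cols
        x[f] = Fraction(1)           # set one free variable to 1
        for row_idx, pc in enumerate(pivots):
            x[pc] = -M[row_idx][f]   # back out the pivot variables
        basis.append(x)
    return basis

A = [[1, 2, 0, 1],
     [0, 1, 1, 0],
     [1, 3, 1, 1]]
A_T = [list(col) for col in zip(*A)]   # rows of A_T are the columns of A

null_A = null_space(A)       # basis of Null(A), vectors in R^4
lnull_A = null_space(A_T)    # basis of LNull(A), vectors in R^3

# Orthogonality to a spanning set implies orthogonality to the whole subspace.
print(all(dot(x, row) == 0 for x in null_A for row in A))      # True
print(all(dot(y, col) == 0 for y in lnull_A for col in A_T))   # True
print(len(null_A), len(lnull_A))                               # 2 1
```

With rank 2, the dimensions are complementary as expected: $2 + 2 = 4 = n$ for the null and row spaces, and $1 + 2 = 3 = m$ for the left null and column spaces.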

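A linear system $A\mathbf{x} = \mathbf{b}$ has a solution if and only if $\mathbf{b}$ is orthogonal to $\text{LNull}(A)$.

We know that $A\mathbf{x} = \mathbf{b}$ has a solution if and only if $\mathbf{b} \in \text{Col}(A)$, since $A\mathbf{x}$ is a linear combination of the columns of $A$.

From Theorem 2 we know that $\text{LNull}(A) = \text{Col}(A)^\perp$, or equivalently, that $\text{Col}(A) = \text{LNull}(A)^\perp$.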

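So, this means that $\mathbf{b} \perp \text{LNull}(A)$, or equivalently, that $\mathbf{y}^\top \mathbf{b} = 0$ for all $\mathbf{y}$ such that $A^\top \mathbf{y} = \mathbf{0}$. Just to get a sense of why this is perfectly reasonable, let's assume that we can find a $\mathbf{y} \in \text{LNull}(A)$ for which $\mathbf{y}^\top \mathbf{b} \neq 0$. This implies that we have an inconsistent set of equations, which can be seen as follows.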

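Let $\mathbf{x}$ be any solution to $A\mathbf{x} = \mathbf{b}$, and take the inner product of both sides with $\mathbf{y}$:

$$\mathbf{y}^\top A \mathbf{x} = \mathbf{y}^\top \mathbf{b}. \tag{10}$$

But since $\mathbf{y} \in \text{LNull}(A)$, we have $\mathbf{y}^\top A \mathbf{x} = (A^\top \mathbf{y})^\top \mathbf{x} = 0$ for any $\mathbf{x}$, meaning we must have $\mathbf{y}^\top \mathbf{b} = 0$. However, we picked a special $\mathbf{y}$ such that $\mathbf{y}^\top \mathbf{b} \neq 0$. This is a contradiction! One of our assumptions must therefore be false: either $A\mathbf{x} = \mathbf{b}$ has no solution, or $\mathbf{y}^\top \mathbf{b} = 0$.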

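Another way of thinking about (10): if $\mathbf{y}^\top A = \mathbf{0}^\top$, then we can add the equations of $A\mathbf{x} = \mathbf{b}$ together, weighted by the elements of $\mathbf{y}$, so that the left-hand sides cancel to zero. So, the only way for $A\mathbf{x} = \mathbf{b}$ to be compatible is if the same weighted combination of the right-hand side, $\mathbf{y}^\top \mathbf{b}$, also equals 0.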
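The weighted-sum picture can be seen on a tiny hypothetical system: $x_1 = b_1$, $x_2 = b_2$, $x_1 + x_2 = b_3$. Adding the first two equations and subtracting the third cancels the left-hand sides, which corresponds to the weights $\mathbf{y} = (1, 1, -1)$. The matrix, the weights, and the helper names below are assumptions made for this sketch; for this particular $A$ (rank 2, three rows), $\text{LNull}(A)$ is one dimensional and spanned by $\mathbf{y}$.

```python
def dot(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

# Hypothetical system: x1 = b1, x2 = b2, x1 + x2 = b3.
A = [[1, 0],
     [0, 1],
     [1, 1]]
y = [1, 1, -1]            # weights: (eq. 1) + (eq. 2) - (eq. 3)

# y^T A = 0: the weighted left-hand sides cancel column by column.
columns = list(zip(*A))
print([dot(y, col) for col in columns])    # [0, 0]

def is_compatible(b):
    """Solvability test for this A: A x = b is solvable iff y . b == 0."""
    return dot(y, b) == 0

def solvable_directly(b):
    """Cross-check: the first two equations force x = (b1, b2)."""
    x = [b[0], b[1]]
    return all(dot(row, x) == bi for row, bi in zip(A, b))

b_good = [1, 2, 3]        # y . b = 0, so a solution exists (x = (1, 2))
b_bad = [1, 2, 4]         # y . b = -1, so the equations are inconsistent

print(is_compatible(b_good), solvable_directly(b_good))    # True True
print(is_compatible(b_bad), solvable_directly(b_bad))      # False False
```

For `b_bad`, the same weighted combination of the equations would read $0 = -1$, which is exactly the inconsistency described above.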