2.3 Span and Linear Independence The building blocks of subspaces

Dept. of Electrical and Systems Engineering

University of Pennsylvania

1 Reading ¶ Material related to this page, as well as additional exercises, can be found in ALA Ch. 2.3 and LAA 4.1, 4.3.

2 Learning Objectives ¶ By the end of this page, you should know:

how to form linear combinations of vectors a span of a set of vectors what it means for a collection of vectors to be linearly dependent and independent how to check for linear dependence and independence 3 Span and Linear Independence ¶ 3.1 Building Subspaces ¶ One natural way of constructing a subspace is to start with some building blocks v 1 , … , v k ∈ V \vv v_1, \dots, \vv v_k\in V v 1 , … , v k ∈ V V V V linear combinations of them.

Definition 1 (Linear Combination and Span)

Let v 1 , … , v k \vv v_1 , \ldots, \vv v_k v 1 , … , v k V V V

c 1 v 1 + c 2 v 2 + … + c k v k = ∑ i = 1 k c i v i , c_1 \vv v_1 + c_2 \vv v_2 + \ldots + c_k \vv v_k = \sum_{i=1}^k c_i \vv v_i, c 1 v 1 + c 2 v 2 + … + c k v k = i = 1 ∑ k c i v i , where the coefficients c 1 , c 2 , … , c k c_1 , c_2 ,\ldots , c_k c 1 , c 2 , … , c k linear combination of the elements v 1 , … , v k \vv v_1 , \ldots, \vv v_k v 1 , … , v k span is the subset

W = span { v 1 , … , v k } ⊂ V W =\textrm{span}\{\vv v_1 , \ldots, \vv v_k\} \subset V W = span { v 1 , … , v k } ⊂ V consisting of all possible linear combinations with scalars c 1 , c 2 , … , c k ∈ R c_1 , c_2 ,\ldots , c_k \in \mathbb{R} c 1 , c 2 , … , c k ∈ R

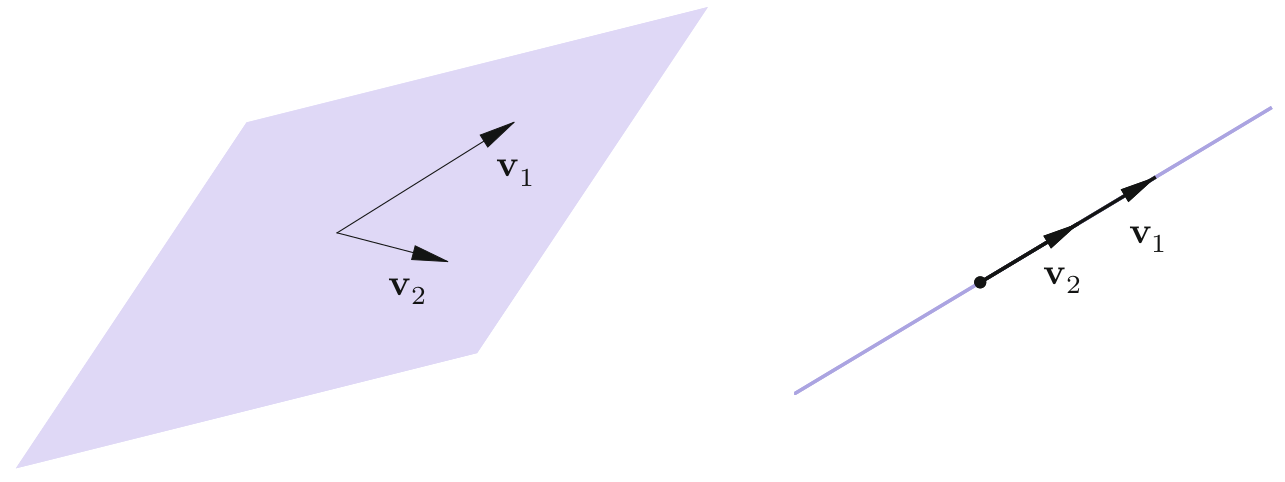

A plane and a line spanned by two vectors v 1 , v 2 \vv v_1, \vv v_2 v 1 , v 2 Figure 1 c ∈ R c \in \mathbb{R} c ∈ R v 1 = c v 2 \vv v_1 = c \vv v_2 v 1 = c v 2

The span W = span { v 1 , … , v k } W =\textrm{span}\{\vv v_1 , \ldots, \vv v_k\} W = span { v 1 , … , v k } v 1 , … , v k ∈ V \vv v_1 , \ldots, \vv v_k \in V v 1 , … , v k ∈ V subspace V V V

This key fact is not hard to check using the properties of vector addition and scalar multiplication, but it is a surprisingly powerful tool for generating useful subspaces, and for checking if a vector v ∈ V \vv v \in V v ∈ V W W W

Example 1 (Spans of 2 Vectors in

R 3 \mathbb{R}^3 R 3 )

Let v 1 , v 2 ∈ R 3 \vv v_1, \vv v_2 \in \mathbb{R}^3 v 1 , v 2 ∈ R 3

If v 1 \vv v_1 v 1 v 2 \vv v_2 v 2 c v 1 + d v 2 c \vv v_1 + d \vv v_2 c v 1 + d v 2 If v 1 \vv v_1 v 1 v 2 \vv v_2 v 2 c v 1 c \vv v_1 c v 1 See Figure 1

Exercise 1 (Checking Membership of a Vector in a Span of Vectors)

Let W = span { v 1 , v 2 } ⊂ R 3 W = \textrm{span}\{\vv v_1, \vv v_2\} \subset \mathbb{R}^3 W = span { v 1 , v 2 } ⊂ R 3

v 1 = [ 1 2 1 ] , v 2 = [ − 2 − 3 5 ] . \vv v_1 = \bm 1 \\ 2 \\ 1 \em, \ \vv v_2 = \bm -2 \\ -3 \\ 5 \em. v 1 = ⎣ ⎡ 1 2 1 ⎦ ⎤ , v 2 = ⎣ ⎡ − 2 − 3 5 ⎦ ⎤ . Is the vector v = [ 1 3 8 ] \vv v = \bm 1 \\ 3 \\ 8 \em v = ⎣ ⎡ 1 3 8 ⎦ ⎤ W W W v ~ = [ 1 0 0 ] \vv{\tilde v} = \bm 1\\0\\0 \em v ~ = ⎣ ⎡ 1 0 0 ⎦ ⎤

If v ∈ W \vv v \in W v ∈ W c 1 , c 2 ∈ R c_1, c_2 \in \mathbb R c 1 , c 2 ∈ R v = c 1 v 1 + c 1 v 2 \vv v = c_1 \vv{v_1} + c_1 \vv{v_2} v = c 1 v 1 + c 1 v 2

[ 0 1 − 1 ] = c 1 [ 1 − 2 1 ] + c 2 [ 2 − 3 1 ] = [ c 1 + 2 c 2 − 2 c 1 − 3 c 2 c 1 + c 2 ] \begin{align*}

\bm 0&1&-1 \em = c_1 \bm 1\\-2\\1 \em + c_2\bm 2\\-3\\1\em = \bm c_1 + 2c_2\\-2c_1 - 3c_2\\c_1+c_2 \em

\end{align*} [ 0 1 − 1 ] = c 1 ⎣ ⎡ 1 − 2 1 ⎦ ⎤ + c 2 ⎣ ⎡ 2 − 3 1 ⎦ ⎤ = ⎣ ⎡ c 1 + 2 c 2 − 2 c 1 − 3 c 2 c 1 + c 2 ⎦ ⎤ That is, c 1 c_1 c 1 c 2 c_2 c 2

c 1 + 2 c 2 = 0 − 2 c 1 − 3 c 2 = 1 c 1 + c 2 = − 1 \begin{align*}

c_1 + 2c_2 &= 0\\

-2c_1 - 3c_2 &= 1\\

c_1 + c_2 &= -1

\end{align*} c 1 + 2 c 2 − 2 c 1 − 3 c 2 c 1 + c 2 = 0 = 1 = − 1 which we solve using Gaussian Elimination to get c 1 = − 2 , c 2 = 1 c_1 = -2, c_2 = 1 c 1 = − 2 , c 2 = 1 v = − 2 v 1 + v 2 ∈ W \vv v = -2 \vv{v_1} + \vv{v_2} \in W v = − 2 v 1 + v 2 ∈ W v ~ = [ 1 0 0 ] \vv{\tilde v} = \bm 1\\0\\0 \em v ~ = ⎣ ⎡ 1 0 0 ⎦ ⎤ W W W v ~ ∉ W \vv{\tilde v} \not\in W v ~ ∈ W

Example 2 (Span in a Function Space)

Let V = F ( R ) V = \mathcal F(\mathbb R) V = F ( R ) f ( x ) f(x) f ( x ) R \mathbb R R f 1 ( x ) = 1 f_1(x) = 1 f 1 ( x ) = 1 f 2 ( x ) = x f_2(x) = x f 2 ( x ) = x f 3 ( x ) = x 2 f_3(x) = x^2 f 3 ( x ) = x 2

f ( x ) = c 1 f 1 ( x ) + c 2 f 2 ( x ) + c 3 f 3 ( x ) = c 1 + c 2 x + c 3 x 2 \begin{align*}

f(x) = c_1f_1(x) + c_2f_2(x) + c_3f_3(x) = c_1 + c_2x + c_3x^2

\end{align*} f ( x ) = c 1 f 1 ( x ) + c 2 f 2 ( x ) + c 3 f 3 ( x ) = c 1 + c 2 x + c 3 x 2 where c 1 , c 2 , c 3 ∈ R c_1, c_2, c_3 \in \mathbb R c 1 , c 2 , c 3 ∈ R span { 1 , x , x 2 } = P ( 2 ) \text{span} \{1, x, x^2\} = P^{(2)} span { 1 , x , x 2 } = P ( 2 )

3.2 Linear Independence and Dependence ¶ Linear dependence captures a notion of “redundancy” in a collection of vectors.

Definition 2 (Linear Dependency)

The vectors v 1 , … , v k ∈ V \vv v_1 , \ldots , \vv v_k \in V v 1 , … , v k ∈ V linearly dependent if there exist scalars c 1 , … , c k c_1 , \ldots , c_k c 1 , … , c k not all zero , such that

c 1 v 1 + … + c k v k = 0. c_1 \vv v_1 + \ldots + c_k \vv v_k = \vv 0. c 1 v 1 + … + c k v k = 0 . Elements that are not linearly dependent are called linearly independent .

The condition (7) v i \vv v_i v i v 1 , … , v k \vv v_1 , \ldots , \vv v_k v 1 , … , v k

For any vector v = [ v 1 v 2 v 3 ] ∈ R 3 \vv v = \bm v_1 \\ v_2 \\ v_3 \em \in \mathbb{R}^3 v = ⎣ ⎡ v 1 v 2 v 3 ⎦ ⎤ ∈ R 3 { e 1 , e 2 , e 3 , v } \{\vv e_1, \vv e_2, \vv e_3, \vv v\} { e 1 , e 2 , e 3 , v }

v = v 1 [ 1 0 0 ] + v 2 [ 0 1 0 ] + v 3 [ 0 0 1 ] = v 1 e 1 + v 2 e 2 + v 3 e 3 . \vv v = v_1 \bm 1 \\ 0 \\ 0\em + v_2 \bm 0 \\ 1 \\ 0\em + v_3 \bm 0 \\ 0 \\ 1\em = v_1 \vv e_1 + v_2 \vv e_2 + v_3 \vv e_3. v = v 1 ⎣ ⎡ 1 0 0 ⎦ ⎤ + v 2 ⎣ ⎡ 0 1 0 ⎦ ⎤ + v 3 ⎣ ⎡ 0 0 1 ⎦ ⎤ = v 1 e 1 + v 2 e 2 + v 3 e 3 . The vectors

v 1 = [ 1 2 1 ] , v 2 = [ 0 3 1 ] , \vv v_1 = \bm 1 \\ 2 \\ 1\em,

\vv v_2 = \bm 0 \\ 3 \\ 1\em, v 1 = ⎣ ⎡ 1 2 1 ⎦ ⎤ , v 2 = ⎣ ⎡ 0 3 1 ⎦ ⎤ , are linearly independent. To see this, suppose that

c 1 v 1 + c 2 v 2 = [ c 1 2 c 1 + 3 c 2 − c 1 + c 2 ] = [ 0 0 0 ] .

c_1 \vv v_1 + c_2 \vv v_2 = \bm c_1 \\ 2c_1 + 3c_2 \\ -c_1 + c_2 \em = \bm 0 \\ 0 \\ 0 \em. c 1 v 1 + c 2 v 2 = ⎣ ⎡ c 1 2 c 1 + 3 c 2 − c 1 + c 2 ⎦ ⎤ = ⎣ ⎡ 0 0 0 ⎦ ⎤ . because the only way this can happen is if c 1 = 0 c_1 = 0 c 1 = 0 c 2 = 0 c_2 = 0 c 2 = 0

The polynomials p 1 ( x ) = x − 2 p_1(x) = x - 2 p 1 ( x ) = x − 2 p 2 ( x ) = x 2 − 5 x + 4 p_2(x) = x^2 - 5x + 4 p 2 ( x ) = x 2 − 5 x + 4 p 3 ( x ) = 3 x 2 − 4 x p_3(x) = 3x^2 - 4x p 3 ( x ) = 3 x 2 − 4 x p 4 ( x ) = x 2 − 1 p_4(x) = x^2 - 1 p 4 ( x ) = x 2 − 1

p 1 ( x ) + p 2 ( x ) − p 3 ( x ) + 2 p 4 ( x ) = 0 for all x \begin{align*}

p_1(x) + p_2(x) - p_3(x) + 2p_4(x) = 0 \quad\text{for all $x$}

\end{align*} p 1 ( x ) + p 2 ( x ) − p 3 ( x ) + 2 p 4 ( x ) = 0 for all x On the other hand, the three polynomials p 1 ( x ) , p 2 ( x ) , p 3 ( x ) p_1(x), p_2(x), p_3(x) p 1 ( x ) , p 2 ( x ) , p 3 ( x )

c 1 p 1 ( x ) + c 2 p 2 ( c ) + c 3 p 3 ( x ) = ( c 2 + 3 c 3 ) x 2 + ( c 1 − 5 c 2 + 4 c 3 ) x − 2 c 1 + 4 c 2 = 0 for all x \begin{align*}

c_1p_1(x) + c_2p_2(c) + c_3p_3(x) = (c_2 + 3c_3)x^2 + (c_1 - 5c_2 + 4c_3)x - 2c_1 + 4c_2 = 0 \quad\text{for all $x$}

\end{align*} c 1 p 1 ( x ) + c 2 p 2 ( c ) + c 3 p 3 ( x ) = ( c 2 + 3 c 3 ) x 2 + ( c 1 − 5 c 2 + 4 c 3 ) x − 2 c 1 + 4 c 2 = 0 for all x This is true if c 2 + 3 c 3 = 0 , c 1 − 5 c 2 − 4 c 3 = 0 , − 2 c 1 + 4 c 2 = 0 c_2 + 3c_3 = 0, c_1 - 5c_2 - 4c_3 = 0, -2c_1 + 4c_2 = 0 c 2 + 3 c 3 = 0 , c 1 − 5 c 2 − 4 c 3 = 0 , − 2 c 1 + 4 c 2 = 0 c 1 = c 2 = c 3 = 0 c_1 = c_2 = c_3 = 0 c 1 = c 2 = c 3 = 0

4 Checking Linear Independence in R n \mathbb{R}^n R n ¶ Given the task of checking whether v 1 , … , v k ∈ R n \vv v_1 , \ldots , \vv v_k \in \mathbb{R}^n v 1 , … , v k ∈ R n n × k n \times k n × k A = [ v 1 … v k ] A = \bm \vv v_1 & \ldots & \vv v_k \em A = [ v 1 … v k ] v i \vv v_i v i (7)

A c = c 1 v 1 + … + c k v k , where c = [ c 1 c 2 ⋮ c k ] , A \vv c = c_1 \vv v_1 + \ldots + c_k \vv v_k, \ \textrm{where} \ \vv c = \bm c_1 \\ c_2 \\ \vdots \\ c_k \em, A c = c 1 v 1 + … + c k v k , where c = ⎣ ⎡ c 1 c 2 ⋮ c k ⎦ ⎤ , that is, we write any linear combination of the vectors v 1 , … , v k \vv v_1, \dots, \vv v_k v 1 , … , v k c 1 , … , c k c_1, \dots, c_k c 1 , … , c k

Let v 1 , … , v k ∈ R n \vv v_1 , \ldots , \vv v_k \in \mathbb{R}^n v 1 , … , v k ∈ R n A = [ v 1 … v k ] ∈ R n × k A = \bm \vv v_1 & \ldots & \vv v_k \em \in \mathbb{R}^{n \times k} A = [ v 1 … v k ] ∈ R n × k

The v 1 , … , v k ∈ R n \vv v_1 , \ldots , \vv v_k \in \mathbb{R}^n v 1 , … , v k ∈ R n c = 0 \vv c = \vv 0 c = 0 A c = 0 A \vv c = 0 A c = 0 The vectors are linearly independent if and only if the only solution to the system A c = 0 A \vv c = 0 A c = 0 c = 0 \vv c = \vv 0 c = 0 A vector b \vv b b v 1 , … , v k \vv v_1 , \ldots , \vv v_k v 1 , … , v k A c = b A \vv c = \vv b A c = b We prove the first statement, leaving the other two as exercises for you. The condition that v 1 , … , v k \vv v_1 , \ldots , \vv v_k v 1 , … , v k

c = [ c 1 c 2 ⋮ c k ] ≠ 0 such that A c = c 1 v 1 + … + c k v k = 0. \vv c = \bm c_1 \\ c_2 \\ \vdots \\ c_k \em \neq \vv 0 \ \textrm{such that} \

A \vv c = c_1 \vv v_1 + \ldots + c_k \vv v_k = \vv 0. c = ⎣ ⎡ c 1 c 2 ⋮ c k ⎦ ⎤ = 0 such that A c = c 1 v 1 + … + c k v k = 0 . Therefore, linear dependence requires the existence of a nontrivial solution to the linear system A c = 0 A \vv c = \vv 0 A c = 0

Observations from

Theorem 1 Any collection of k > n k > n k > n R n \mathbb{R}^n R n free variable A collection of k k k R n \mathbb{R}^n R n A c = 0 A \vv c = \vv 0 A c = 0 rank A A A k k k k ≤ n k \leq n k ≤ n A collection of k k k R n \mathbb{R}^n R n n × k n \times k n × k n n n k ≥ n k \geq n k ≥ n