1 Reading

Material related to this page, as well as additional exercises, can be found in VMLS 3.2.

2 Learning Objectives

By the end of this page, you should know:

- the Euclidean distance between two vectors
- the properties of a general distance function

3 The Euclidean Distance

A distance function, or metric, describes how far apart 2 points are.

A familiar starting point for our study of distances will be the Euclidean distance, which is closely related to the Euclidean norm on $\mathbb{R}^n$.

Definition 1 (The Euclidean Distance)

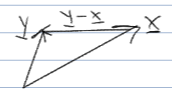

For vectors $\vv u, \vv v \in \mathbb{R}^n$, we define their Euclidean distance as the norm of the difference vector $\vv u - \vv v$:

\begin{align*}
\text{dist}(\vv u, \vv v) = \| \vv u - \vv v\| = \sqrt{\langle \vv u - \vv v, \vv u - \vv v \rangle}
\end{align*}

Note that this is measuring the length of the arrow drawn from point $\vv v$ to point $\vv u$.

3.1 Python break!

We use the np.linalg.norm function in Python to compute the Euclidean distance between two vectors by computing the norm of the difference between the vectors.

# Distance between vectors

import numpy as np

v1 = np.array([1, 2])

v2 = np.array([3, 4])

euc_dist = np.linalg.norm(v1 - v2)

print("Euclidean distance:", euc_dist)

Euclidean distance: 2.8284271247461903
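As a sanity check on Definition 1, the same number can be obtained directly from the inner-product formula. The sketch below reuses the vectors v1 and v2 from above; the names diff, dist_from_inner, and dist_from_norm are ours and are only for illustration.

```python
import numpy as np

v1 = np.array([1, 2])
v2 = np.array([3, 4])

# Difference vector u - v from Definition 1
diff = v1 - v2

# dist(u, v) = sqrt(<u - v, u - v>)
dist_from_inner = np.sqrt(np.dot(diff, diff))

# Should agree with np.linalg.norm up to floating-point rounding
dist_from_norm = np.linalg.norm(diff)

print(dist_from_inner, dist_from_norm)
print(np.isclose(dist_from_inner, dist_from_norm))
```

Both routes compute $\sqrt{(-2)^2 + (-2)^2} = \sqrt{8}$, so they should agree up to rounding.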

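4 General Distances

In this course, we will only work with the Euclidean distance. However, given any vector space with a general norm (i.e., not only $\mathbb{R}^n$ with the Euclidean norm), we can define a distance between two vectors as the norm of their difference.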

Definition 2 (General Distances)

For a set $S$, a function $d : S \times S \to \mathbb{R}$ is a distance function (or metric) if it satisfies the following three properties:

Symmetry. For all $x, y \in S$,

\begin{align*}
d(x, y) = d(y, x)
\end{align*}

Positivity. For all $x, y \in S$,

\begin{align*}
d(x, y) \geq 0
\end{align*}

and $d(x, y) = 0$ if and only if $x = y$.

Triangular Inequality. For all $x, y, z \in S$,

\begin{align*}
d(x, z) \leq d(x, y) + d(y, z)
\end{align*}

Try to convince yourself why the Euclidean distance satisfies these three properties.
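A numerical spot check can help build intuition for the three properties. The sketch below tests them for the Euclidean distance on random vectors in $\mathbb{R}^5$; the helper dist, the seed, the dimension, and the tolerance tol are our own choices. Passing such a check is evidence, not a proof.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

def dist(u, v):
    """Euclidean distance between u and v."""
    return np.linalg.norm(u - v)

tol = 1e-12  # small slack for floating-point rounding
all_ok = True
for _ in range(1000):
    x, y, z = rng.standard_normal((3, 5))  # three random vectors in R^5
    symmetric = np.isclose(dist(x, y), dist(y, x))
    positive = dist(x, y) >= 0 and dist(x, x) == 0
    triangle = dist(x, z) <= dist(x, y) + dist(y, z) + tol
    all_ok = bool(all_ok and symmetric and positive and triangle)

print(all_ok)
```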

When the distance between $\vv x, \vv y \in V$ is defined as $\| \vv x - \vv y \|$ for some norm on $V$, we say that the distance $\| \vv x - \vv y \|$ is induced by that norm.

Note that one vector space can admit many distance functions. From here on, unless otherwise mentioned, we will only be considering the Euclidean distance.

Example 1 (Matrix Norms and their Induced Distances)

Let $M \in \mathbb{R}^{n \times n}$ be a symmetric matrix satisfying $x^T M x > 0$ for all nonzero $x\in \mathbb{R}^n$. Such a matrix is called positive definite; some equivalent definitions of positive definite matrices are symmetric matrices which may be decomposed as $A^TA$ for an invertible matrix $A$, or symmetric matrices with strictly positive eigenvalues. A familiar positive definite matrix is the identity matrix, $I_n$.

Then, $M$ defines an inner product $\langle \vv u, \vv v \rangle_M = \vv u^T M \vv v$. Because $M$ reweights the entries of the ordinary dot product, we can think of $\langle \vv u, \vv v \rangle_M$ as a weighted dot product; with $M = I_n$ it reduces to the usual dot product.

The inner product in turn induces a norm $\|\vv v\|_M = \sqrt{\langle \vv v, \vv v\rangle_M} = \sqrt{\vv v^T M \vv v}$, and this norm induces the distance $\text{dist}_M(\vv u, \vv v) = \|\vv u - \vv v\|_M$.
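To make the norm $\|\vv v\|_M$ and the distance it induces concrete, here is a minimal sketch. The matrix A, the vectors u and v, and the helper names inner_M, norm_M, and dist_M are all hypothetical choices for illustration.

```python
import numpy as np

# Hypothetical example: build a positive definite M as A^T A with A invertible
A = np.array([[2.0, 0.0],
              [1.0, 1.0]])
M = A.T @ A

def inner_M(u, v):
    """Inner product <u, v>_M = u^T M v."""
    return u @ M @ v

def norm_M(v):
    """Norm induced by the M inner product."""
    return np.sqrt(inner_M(v, v))

def dist_M(u, v):
    """Distance induced by the M norm."""
    return norm_M(u - v)

u = np.array([1.0, 2.0])
v = np.array([3.0, 4.0])

print(np.linalg.eigvalsh(M))         # eigenvalues of M, all strictly positive
print(dist_M(u, v))                  # distance induced by M
print(np.linalg.norm(A @ (u - v)))   # same value, since x^T A^T A x = ||A x||^2
```

Replacing M with np.eye(2) in this sketch recovers the Euclidean distance between u and v.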

Example 2 (Distances on a Connected Graph)

In this example, we’ll demonstrate how the definition of a distance function applies to the shortest walk distance on a connected undirected graph.

An undirected graph consists of a set of vertices and a set of edges, each of which connects 2 vertices. Oftentimes, undirected graphs are drawn as follows: the vertices are dots, and the edges are lines connecting 2 dots. So we can represent an example of an undirected graph with the image below. (For our purposes, we will assume that each pair of vertices can have at most 1 edge connecting them, and that no vertex has an edge to itself.)

A walk in an undirected graph is a sequence of vertices $v_1, v_2, ..., v_{k - 1}, v_k$ in which each consecutive pair of vertices is connected by an edge. The number of edges traversed, $k - 1$, is the walk's length. In the image, for example, $3 \to 5\to 6\to 10$ is a walk of length 3.

We say an undirected graph is connected if there is at least one walk between every pair of vertices. The above graph is connected.

If a graph is connected, then we can define the shortest walk distance as follows. For vertices $u, v$,

\begin{align*}
\text{dist}(u, v) = \text{length of shortest walk starting at $u$ and ending at $v$}
\end{align*}

We will verify that the shortest walk distance indeed satisfies the three axioms of a distance function.
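One common way to compute the shortest walk distance is breadth-first search (BFS), which explores vertices in order of increasing distance from the start. The sketch below is illustrative only: the adjacency-list graph is a hypothetical example (not the graph from the image), and the function name shortest_walk_distance is ours.

```python
from collections import deque

# Hypothetical connected undirected graph, stored as an adjacency list.
# (This is NOT the graph from the image; it is only for illustration.)
graph = {
    1: [2, 3],
    2: [1, 4],
    3: [1, 4],
    4: [2, 3, 5],
    5: [4],
}

def shortest_walk_distance(graph, u, v):
    """Number of edges on a shortest walk from u to v, found by BFS."""
    dist = {u: 0}          # the walk consisting of u alone has length 0
    queue = deque([u])
    while queue:
        current = queue.popleft()
        if current == v:
            return dist[current]
        for neighbor in graph[current]:
            if neighbor not in dist:      # first visit is via a shortest walk
                dist[neighbor] = dist[current] + 1
                queue.append(neighbor)
    raise ValueError("v is not reachable from u; the graph is not connected")

print(shortest_walk_distance(graph, 1, 5))  # 1 -> 2 -> 4 -> 5 uses 3 edges
print(shortest_walk_distance(graph, 5, 1))
print(shortest_walk_distance(graph, 3, 3))
```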

Symmetry. For any two vertices $u, v$, let $P = u \to ... \to v$ be a shortest walk from $u$ to $v$, and let $l$ be its length, so that $d(u, v) = l$. Reversing $P$ gives a walk from $v$ to $u$ of length $l$. Hence, $d(v, u) \leq l = d(u, v)$.

Next, let $Q = v\to ...\to u$ be a shortest walk from $v$ to $u$, and let $l'$ be its length. Reversing $Q$ gives a walk from $u$ to $v$ of length $l'$. Hence, $d(u, v) \leq l' = d(v, u)$.

Taken together, these two inequalities imply that $d(u, v) = d(v, u)$.

Positivity. For vertices $u \neq v$, any walk from $u$ to $v$ must traverse at least one edge, so $d(u, v) > 0$ whenever $u \neq v$. Also, $d(v, v) = 0$, because the single-vertex walk $P = v$ has length 0.

Triangular Inequality. For vertices $u, v, w$, we want to show that

\begin{align*}
d(u, w) \leq d(u, v) + d(v, w)
\end{align*}

Note that if $P_{uv}$ is a walk of length $d(u, v)$ from $u$ to $v$, and $P_{vw}$ is a walk of length $d(v, w)$ from $v$ to $w$, then the concatenation $P_{uv} \to P_{vw}$ is a walk from $u$ to $w$ of length $d(u, v) + d(v, w)$. Since the shortest walk from $u$ to $w$ can be no longer than this one, $d(u, w) \leq d(u, v) + d(v, w)$.
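The three axioms can also be checked by brute force on a small example. The sketch below computes all pairwise distances with the Floyd-Warshall algorithm (a technique we swap in here, not one used on this page), whose update step mirrors the concatenation argument above. The vertex list and edge list are a hypothetical graph, not the one from the image.

```python
import itertools

# Hypothetical connected undirected graph given as a list of edges
# (illustrative only; it is not the graph from the image).
vertices = [1, 2, 3, 4, 5]
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5)]

INF = float("inf")
d = {(a, b): (0 if a == b else INF) for a in vertices for b in vertices}
for a, b in edges:
    d[a, b] = d[b, a] = 1  # each edge is a walk of length 1 in both directions

# Floyd-Warshall: allow walks through one more intermediate vertex v at a time
for v in vertices:
    for u in vertices:
        for w in vertices:
            d[u, w] = min(d[u, w], d[u, v] + d[v, w])

pairs = list(itertools.product(vertices, repeat=2))
triples = list(itertools.product(vertices, repeat=3))

symmetry = all(d[u, v] == d[v, u] for u, v in pairs)
positivity = all(d[u, v] >= 0 and (d[u, v] == 0) == (u == v) for u, v in pairs)
triangle = all(d[u, w] <= d[u, v] + d[v, w] for u, v, w in triples)

print(symmetry, positivity, triangle)
```

A check like this only covers one graph, so it complements the argument above rather than replacing it.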