7.1 Complex Eigenvalues and Linear Dynamical Systems

Euler breaks down complex exponentials

0.1 Reading

Material related to this page, as well as additional exercises, can be found in ALA 10.1 and 10.3.

0.2 Learning Objectives

By the end of this page, you should know:

- how to use Euler’s formula,

- how to write real solutions to linear dynamical systems that have complex eigenvalues.

1 Solving Linear ODEs with Complex Eigenvalues

In the previous section on linear ODEs, we learned how to find the solutions to $\dot{x} = Ax$ when the coefficient matrix $A$ was diagonalizable. We also saw some examples in which $A$ was diagonalizable and had all real eigenvalues. It turns out that the same procedure generalizes to the case where $A$ is diagonalizable but has complex eigenvalues. On this page, we'll see how this can be done.

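Let’s apply our solution method for diagonalizable $A$ to $\dot{x} = Ax$ with

$$A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}.$$

We are given that $A$ has eigenvalue/eigenvector pairs:

$$\lambda_1 = i, \quad v_1 = \begin{bmatrix} 1 \\ -i \end{bmatrix}, \qquad \lambda_2 = -i, \quad v_2 = \begin{bmatrix} 1 \\ i \end{bmatrix}.$$

Even though these eigenvalues/vectors have complex entries, we can still use our solution method for diagonalizable $A$! We write the solution to $\dot{x} = Ax$ as

$$x(t) = c_1 e^{\lambda_1 t} v_1 + c_2 e^{\lambda_2 t} v_2 = c_1 e^{it} v_1 + c_2 e^{-it} v_2, \tag{SOL}$$

i.e., $x(t)$ is a linear combination of the two solutions

$$x_1(t) = e^{it} v_1 \quad \text{and} \quad x_2(t) = e^{-it} v_2, \tag{BASE}$$

and then solve for $c_1$ and $c_2$ to ensure compatibility with the initial condition $x(0)$. In general $c_1$ and $c_2$ will also be complex numbers, and $x(t)$ will take complex values. This is mathematically correct, and indeed one can study dynamical systems evolving over complex numbers. However, we will see that with a little massaging, we can rewrite this solution in a more useful form!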
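The complex form (SOL) can be evaluated numerically as it stands. The sketch below assumes the rotation matrix $A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$ (eigenvalues $\pm i$) and a hypothetical real initial condition $x(0) = (1, 2)$. Note that `np.linalg.eig` returns its own unit-norm scaling of the eigenvectors, which differs from hand-chosen $v_1, v_2$; the coefficients $c = V^{-1} x(0)$ absorb that difference.

```python
import numpy as np

# Assumption: the rotation generator A = [[0, -1], [1, 0]], whose eigenvalues are +i and -i
A = np.array([[0.0, -1.0],
              [1.0, 0.0]])

# Columns of V are eigenvectors; NumPy picks its own (unit-norm) scaling
lam, V = np.linalg.eig(A)

def x_complex(t, x0):
    """Evaluate (SOL): x(t) = c_1 e^{lam_1 t} v_1 + c_2 e^{lam_2 t} v_2 with c = V^{-1} x(0)."""
    c = np.linalg.solve(V, x0.astype(complex))  # complex coefficients c_1, c_2
    return V @ (c * np.exp(lam * t))

x0 = np.array([1.0, 2.0])  # hypothetical real initial condition
xt = x_complex(1.5, x0)

print("eigenvalues:", lam)
print("x(1.5):", xt)  # complex dtype, imaginary part at round-off level
```

Even though every intermediate quantity is complex, a real initial condition yields an $x(t)$ whose imaginary part is pure round-off, which hints that a real form of the solution exists.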

1.1 Rewriting the Solution to a Linear ODE

In this class, and in most engineering applications, we are interested in real solutions to $\dot{x} = Ax$. If we only want real solutions, it makes sense to look for different “base” solutions than $x_1(t)$ and $x_2(t)$ that still span all possible solutions to $\dot{x} = Ax$. Our key tool for accomplishing this is Euler’s formula:

$$e^{i\theta} = \cos\theta + i\sin\theta.$$
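Euler’s formula is easy to sanity-check numerically. The sketch below compares both sides on a grid of angles, and also checks the factorization $e^{(a+ib)t} = e^{at}(\cos bt + i\sin bt)$ that follows from it; the values $a = 1$, $b = 2$ are illustrative only.

```python
import numpy as np

# Sample angles spanning two full turns in each direction
theta = np.linspace(-4 * np.pi, 4 * np.pi, 17)

lhs = np.exp(1j * theta)                  # e^{i theta}
rhs = np.cos(theta) + 1j * np.sin(theta)  # cos(theta) + i sin(theta)
euler_err = np.max(np.abs(lhs - rhs))

# For a complex eigenvalue lam = a + i b (illustrative a = 1, b = 2),
# e^{lam t} = e^{a t} (cos(b t) + i sin(b t)): growth/decay times rotation.
a, b = 1.0, 2.0
t = np.linspace(0.0, 4.0, 41)
growth_rot = np.exp(a * t) * (np.cos(b * t) + 1j * np.sin(b * t))
split_err = np.max(np.abs(np.exp((a + 1j * b) * t) - growth_rot))

print(f"Euler's formula, max abs error:     {euler_err:.1e}")
print(f"e^(lam t) factorization, max error: {split_err:.1e}")
```

The real part $a$ of an eigenvalue controls growth or decay, while the imaginary part $b$ sets the angular frequency of oscillation.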

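We apply Euler’s formula to (BASE) and obtain (after simplifying):

$$x_1(t) = (\cos t + i\sin t)\begin{bmatrix} 1 \\ -i \end{bmatrix} = \begin{bmatrix} \cos t \\ \sin t \end{bmatrix} + i\begin{bmatrix} \sin t \\ -\cos t \end{bmatrix},$$

$$x_2(t) = (\cos t - i\sin t)\begin{bmatrix} 1 \\ i \end{bmatrix} = \begin{bmatrix} \cos t \\ \sin t \end{bmatrix} - i\begin{bmatrix} \sin t \\ -\cos t \end{bmatrix}.$$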

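We’ve made some progress, in that $x_1(t)$ and $x_2(t)$ are now in the “standard” complex number form $a + ib$ (with $a$ and $b$ real vectors), and that $x_2(t) = \overline{x_1(t)}$, i.e., $x_1(t)$ and $x_2(t)$ are complex conjugates. We use this observation strategically to define two new “base” solutions:

$$y_1(t) = \frac{x_1(t) + x_2(t)}{2} = \operatorname{Re} x_1(t) = \begin{bmatrix} \cos t \\ \sin t \end{bmatrix}, \qquad y_2(t) = \frac{x_1(t) - x_2(t)}{2i} = \operatorname{Im} x_1(t) = \begin{bmatrix} \sin t \\ -\cos t \end{bmatrix}.$$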
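The construction of $y_1$ and $y_2$ can be checked numerically. This sketch assumes the rotation matrix $A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$ with the eigenpair $\lambda_1 = i$, $v_1 = (1, -i)$; a different scaling of $v_1$ would give different (but equally valid) $y_1, y_2$. It forms $x_1(t) = e^{it}v_1$ and $x_2(t) = \overline{x_1(t)}$, builds $y_1, y_2$ from them, and confirms that the closed forms satisfy $\dot{y} = Ay$ using their exact derivatives.

```python
import numpy as np

# Assumption: rotation generator A and its eigenpair lambda_1 = i, v_1 = [1, -i]
A = np.array([[0.0, -1.0],
              [1.0, 0.0]])
lam1 = 1j
v1 = np.array([1.0, -1.0j])

t = np.linspace(0.0, 2 * np.pi, 50)

# Complex base solutions x_1(t) = e^{i t} v_1 and x_2(t) = conj(x_1(t)); shape (2, len(t))
x1 = v1[:, None] * np.exp(lam1 * t)
x2 = np.conj(x1)

# New real base solutions (stored as complex arrays with zero imaginary part)
y1 = (x1 + x2) / 2     # = Re x_1(t)
y2 = (x1 - x2) / (2j)  # = Im x_1(t)

# Closed forms: y_1 = [cos t, sin t], y_2 = [sin t, -cos t]
y1_closed = np.vstack((np.cos(t), np.sin(t)))
y2_closed = np.vstack((np.sin(t), -np.cos(t)))

# Exact time derivatives of the closed forms, to check dy/dt = A y pointwise
dy1 = np.vstack((-np.sin(t), np.cos(t)))
dy2 = np.vstack((np.cos(t), np.sin(t)))

print(np.allclose(y1, y1_closed), np.allclose(y2, y2_closed))
print(np.allclose(dy1, A @ y1_closed), np.allclose(dy2, A @ y2_closed))
```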

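We note that since $y_1(t)$ and $y_2(t)$ are linear combinations of $x_1(t)$ and $x_2(t)$, they are valid solutions to $\dot{x} = Ax$. Furthermore, since $y_1(t)$ and $y_2(t)$ are linearly independent (i.e., $c_1 y_1(t) + c_2 y_2(t) = 0$ for all $t$ if and only if $c_1 = c_2 = 0$), they form a basis for the solution set to $\dot{x} = Ax$. Therefore, we can rewrite (SOL) as

$$x(t) = c_1 y_1(t) + c_2 y_2(t) = c_1\begin{bmatrix} \cos t \\ \sin t \end{bmatrix} + c_2\begin{bmatrix} \sin t \\ -\cos t \end{bmatrix}$$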
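The linear-independence claim can be verified directly. Taking $y_1(t) = (\cos t, \sin t)$ and $y_2(t) = (\sin t, -\cos t)$ (the real and imaginary parts of $e^{it}(1, -i)$ for the assumed rotation matrix $A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$), stack them as columns of $Y(t) = [\,y_1(t)\;\; y_2(t)\,]$. Then $c_1 y_1(t) + c_2 y_2(t) = Y(t)\,c$, and

$$\det Y(t) = -\cos^2 t - \sin^2 t = -1 \neq 0,$$

so $Y(t)\,c = 0$ forces $c = 0$. A quick numerical sketch of the same determinant:

```python
import numpy as np

def Y(t):
    """Matrix [y_1(t) y_2(t)] for the assumed rotation example."""
    return np.array([[np.cos(t), np.sin(t)],
                     [np.sin(t), -np.cos(t)]])

ts = np.linspace(0.0, 10.0, 101)
dets = np.array([np.linalg.det(Y(t)) for t in ts])

# The determinant should be -1 at every sampled time
print(dets.min(), dets.max())
```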

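and then solve for $c_1$ and $c_2$ using $x(0)$. If $x(0) \in \mathbb{R}^2$, i.e., if the initial condition is real, then $c_1$ and $c_2$ will be too. For example, suppose $x(0) = \begin{bmatrix} a \\ b \end{bmatrix}$, with $a, b \in \mathbb{R}$. Then:

$$x(0) = c_1 y_1(0) + c_2 y_2(0) = \begin{bmatrix} c_1 \\ -c_2 \end{bmatrix} = \begin{bmatrix} a \\ b \end{bmatrix} \implies c_1 = a, \quad c_2 = -b,$$

and

$$x(t) = a\begin{bmatrix} \cos t \\ \sin t \end{bmatrix} - b\begin{bmatrix} \sin t \\ -\cos t \end{bmatrix} = \begin{bmatrix} \cos t & -\sin t \\ \sin t & \cos t \end{bmatrix}\begin{bmatrix} a \\ b \end{bmatrix},$$

i.e., the solution corresponds to the initial condition $x(0)$ being rotated counterclockwise at an angular frequency of 1 rad/s.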
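The last step can be reproduced numerically. This sketch assumes the rotation matrix $A = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$ with real base solutions $y_1(t) = (\cos t, \sin t)$, $y_2(t) = (\sin t, -\cos t)$, and a hypothetical initial condition $(a, b) = (3, -1)$. It solves for $(c_1, c_2)$ from $x(0)$ and then compares $c_1 y_1(t) + c_2 y_2(t)$ with the rotation matrix and with `scipy.linalg.expm`, used here only as an independent reference for $e^{At}x(0)$.

```python
import numpy as np
from scipy.linalg import expm

A = np.array([[0.0, -1.0],
              [1.0, 0.0]])  # assumed rotation generator

def y1(t):
    return np.array([np.cos(t), np.sin(t)])

def y2(t):
    return np.array([np.sin(t), -np.cos(t)])

a, b = 3.0, -1.0  # hypothetical real initial condition x(0) = [a, b]
x0 = np.array([a, b])

# Solve [y_1(0) y_2(0)] [c_1, c_2]^T = x(0); the derivation gives c_1 = a, c_2 = -b
Y0 = np.column_stack((y1(0.0), y2(0.0)))
c1, c2 = np.linalg.solve(Y0, x0)

def x_real(t):
    """Real solution x(t) = c_1 y_1(t) + c_2 y_2(t)."""
    return c1 * y1(t) + c2 * y2(t)

t = 0.7
R = np.array([[np.cos(t), -np.sin(t)],
              [np.sin(t), np.cos(t)]])  # counterclockwise rotation by t radians

print("c1, c2 =", c1, c2)
print(np.allclose(x_real(t), R @ x0), np.allclose(x_real(t), expm(A * t) @ x0))
```

Since rotations preserve length, $\lVert x(t) \rVert$ stays equal to $\lVert x(0) \rVert$ for all $t$, which is another quick check on the result.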

Python break!

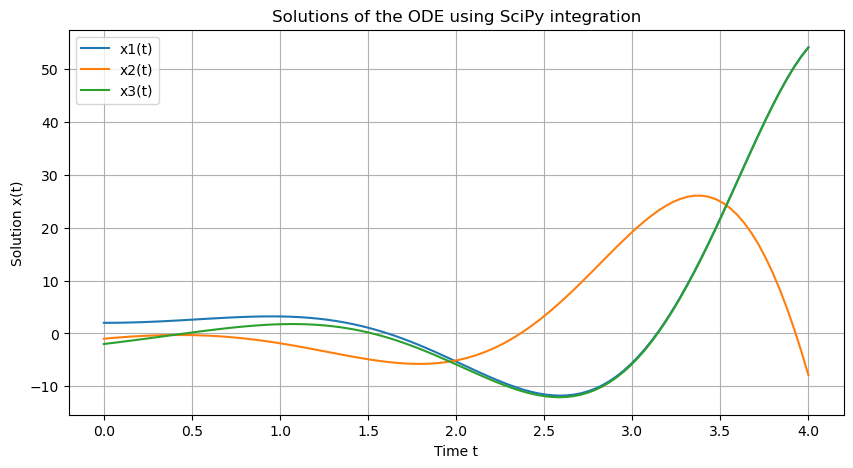

In the code below, we illustrate how to numerically integrate an ordinary differential equation using SciPy’s `solve_ivp` function in Python. We then compare the result with the manual solution we obtained in Example 1.

```python
import numpy as np
from scipy.integrate import solve_ivp
import matplotlib.pyplot as plt

# Coefficient matrix of the linear system dx/dt = A x
A = np.array([[1, 2, 0],
              [0, 1, -2],
              [2, 2, -1]])

def system(t, x):
    """Right-hand side of the linear ODE dx/dt = A x."""
    return A @ x

# Initial condition x(0)
x0 = np.array([2.0, -1.0, -2.0])

# Define time points for the solution
t_span = (0, 4)  # Time from 0 to 4
t_eval = np.linspace(t_span[0], t_span[1], 100)  # Time points where the solution is evaluated

# Solve the ODE system
sol = solve_ivp(system, t_span, x0, t_eval=t_eval)

# Extract the solution
times = sol.t
solutions = sol.y  # shape (3, len(times)); row k holds x_{k+1}(t)

# Plot the solutions
plt.figure(figsize=(10, 5))
plt.plot(times, solutions[0, :], label='x1(t)')
plt.plot(times, solutions[1, :], label='x2(t)')
plt.plot(times, solutions[2, :], label='x3(t)')
plt.xlabel('Time t')
plt.ylabel('Solution x(t)')
plt.title('Solutions of the ODE using SciPy integration')
plt.legend()
plt.grid()
plt.show()
```

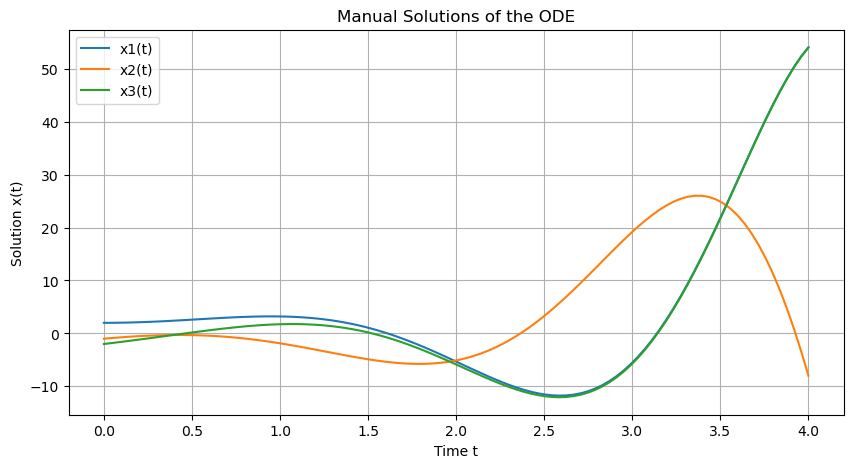

```python
# Manual (closed-form) solution from Example 1, evaluated at the same time points
solutions_manual = np.vstack((2*np.exp(-times) + np.exp(times)*np.sin(2*times),
                              -2*np.exp(-times) + np.exp(times)*np.cos(2*times),
                              -2*np.exp(-times) + np.exp(times)*np.sin(2*times)))

# Plot the solutions
plt.figure(figsize=(10, 5))
plt.plot(times, solutions_manual[0, :], label='x1(t)')
plt.plot(times, solutions_manual[1, :], label='x2(t)')
plt.plot(times, solutions_manual[2, :], label='x3(t)')
plt.xlabel('Time t')
plt.ylabel('Solution x(t)')
plt.title('Manual Solutions of the ODE')
plt.legend()
plt.grid()
plt.show()
```
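Comparing two plots by eye is a coarse check. A minimal sketch of a tighter one, assuming `scipy.linalg.expm` is available: it computes $x(t) = e^{At}x(0)$ directly with the matrix exponential (a different technique from the eigenvector method on this page, used only as an independent reference), reports the largest relative discrepancy with the manual solution, and prints the eigenvalues of $A$, which are $-1$ and the complex-conjugate pair $1 \pm 2i$. That pair is where the $e^{t}\cos 2t$ and $e^{t}\sin 2t$ terms come from.

```python
import numpy as np
from scipy.linalg import expm

A = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, -2.0],
              [2.0, 2.0, -1.0]])
x0 = np.array([2.0, -1.0, -2.0])
times = np.linspace(0.0, 4.0, 100)

# Eigenvalues: one real eigenvalue and one complex-conjugate pair
eigvals = np.linalg.eigvals(A)
print("eigenvalues:", eigvals)

# Reference solution x(t) = e^{At} x(0), one column per time point
exact = np.column_stack([expm(A * t) @ x0 for t in times])

# Closed-form solution from the manual computation
manual = np.vstack((2*np.exp(-times) + np.exp(times)*np.sin(2*times),
                    -2*np.exp(-times) + np.exp(times)*np.cos(2*times),
                    -2*np.exp(-times) + np.exp(times)*np.sin(2*times)))

rel_err = np.max(np.abs(exact - manual)) / np.max(np.abs(exact))
print(f"max relative discrepancy: {rel_err:.2e}")
```

The same comparison can be run against `solutions` from `solve_ivp`; there the discrepancy is governed by the solver's tolerances (`rtol`, `atol`) rather than by round-off.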