1 Reading ¶ Material related to this page, as well as additional exercises, can be found in ALA 8.1.

2 Learning Objectives ¶ By the end of this page, you should know:

how to recognize linear dynamical systems, how to find the solution to a linear ordinary differential equation. 3 Linear Dynamical Systems ¶ A dynamical system refers to the differential equations governing the change of a system over time. This system can be mechanical, electrical, fluid, biological, financial, or even social. In this page, we will learn how to solve special differential equations describing linear dynamical systems . We will draw heavily on the concepts of eigenvalues and eigenvectors covered in the previous pages.

3.1 Scalar Ordinary Differential Equations ¶ Let’s remind ourselves of the solution to first order scalar ordinary differential equations (ODEs), which take the form

d u d t = a u , \begin{align*}

\frac{du}{dt} = au,

\end{align*} d t d u = a u , where a ∈ R a\in \mathbb R a ∈ R u ( t ) u(t) u ( t )

Note that you will sometimes see u ˙ \dot u u ˙ d u d t \frac{du}{dt} d t d u (1)

d d t u ( t ) = a u ( t ) , \begin{align*}

\frac{d}{dt} u(t) = au(t),

\end{align*} d t d u ( t ) = a u ( t ) , however, the argument t t t u ( t ) u(t) u ( t )

The general solution to (1)

u ( t ) = c e a t , \begin{align*}

u(t) = ce^{at}, \tag{SOL}

\end{align*} u ( t ) = c e a t , ( SOL ) where the constant c ∈ R c\in\mathbb R c ∈ R u ( t 0 ) = b u(t_0) = b u ( t 0 ) = b t 0 = 0 t_0 = 0 t 0 = 0 t = t 0 t= t_0 t = t 0 (SOL)

u ( t 0 ) = c e a t 0 = b \begin{align*}

u(t_0) = ce^{at_0} = b

\end{align*} u ( t 0 ) = c e a t 0 = b so that c = b e − a t 0 c = be^{-at_0} c = b e − a t 0

u ( t ) = b e a ( t − t 0 ) \begin{align*}

u(t) = be^{a(t - t_0)}

\end{align*} u ( t ) = b e a ( t − t 0 ) solves (1)

Example 1 (Half-life of an isotope)

The radioactivity decay of uranium-238 is governed by the differential equation

d u d t = − γ u \begin{align*}

\frac{du}{dt} = -\gamma u

\end{align*} d t d u = − γ u where c = u ( 0 ) c = u(0) c = u ( 0 ) t 0 = 0 t_0 = 0 t 0 = 0 u ( t ) u(t) u ( t )

An isotope’s half-life t ∗ t_* t ∗ u ( t ∗ ) = 1 2 u ( 0 ) u(t_*) = \frac 1 2 u(0) u ( t ∗ ) = 2 1 u ( 0 ) t ∗ t_* t ∗

u ( t ∗ ) = u ( 0 ) e − γ t ∗ = 1 2 u ( 0 ) ⟺ e − γ t ∗ = 1 2 ⟺ t ∗ = log 2 γ \begin{align*}

&u(t_*) = u(0) e^{-\gamma t_*} = \frac 1 2 u(0)\\

&\iff e^{-\gamma t_*} = \frac 1 2 \iff t_* = \frac{\log 2}{\gamma}

\end{align*} u ( t ∗ ) = u ( 0 ) e − γ t ∗ = 2 1 u ( 0 ) ⟺ e − γ t ∗ = 2 1 ⟺ t ∗ = γ log 2 Before proceeding to the general case, we make some simple but useful observations:

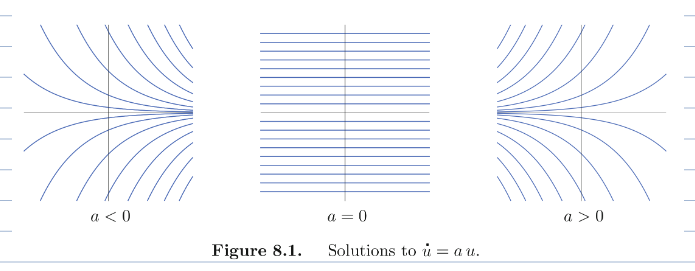

The zero function u ( t ) = 0 u(t) = 0 u ( t ) = 0 t t t c = 0 c=0 c = 0 equilibrium of fixed point solution .

If a > 0 a > 0 a > 0 u = 0 u = 0 u = 0 unstable equilibrium , beacuse any small nonzero initial condition u ( t 0 ) = ϵ u(t_0) = \epsilon u ( t 0 ) = ϵ u = 0 u=0 u = 0

If a < 0 a < 0 a < 0 u = 0 u = 0 u = 0 stable equilibrium (in fact globally asymptotically so), which means that u ( t ) → 0 u(t) \to 0 u ( t ) → 0 t → ∞ t\to \infty t → ∞ u ( t 0 ) u(t_0) u ( t 0 )

The borderline case is a = 0 a = 0 a = 0 u ( t ) = u ( t 0 ) u(t) = u(t_0) u ( t ) = u ( t 0 ) t t t marginally stable (or just stable) because while they don’t blow up on you, they also don’t converge to u = 0 u = 0 u = 0

3.2 First Order Dynamical Systems ¶ We will concerntrate most of our attention on homogenous linear time-invariant first order dynamical systems .

Definition 1 (Homogeneous linear time-invariant first order dynamical system)

For homogeneous linear time-invariant first order dynamical systems , we have a vector-valued solution

u ( t ) = [ u 1 ( t ) u 2 ( t ) ⋮ u n ( t ) ] \begin{align*}

\vv{u}(t) = \bm u_1(t)\\u_2(t) \\ \vdots \\ u_n(t) \em

\end{align*} u ( t ) = ⎣ ⎡ u 1 ( t ) u 2 ( t ) ⋮ u n ( t ) ⎦ ⎤ which parameterizes a curve in R n \mathbb{R}^n R n u ( t ) \vv{u}(t) u ( t )

d u 1 d t = a 11 u 1 + a 12 u 2 + ⋯ + a 1 n u n d u 2 d t = a 21 u 1 + a 22 u 2 + ⋯ + a 2 n u n … d u n d t = a n 1 u 1 + a n 2 u 2 + ⋯ + a n n u n \begin{align*}

\frac{du_1}{d_t} &= a_{11}u_1 + a_{12}u_2 + \dots + a_{1n}u_n\\

\frac{du_2}{d_t} &= a_{21}u_1 + a_{22}u_2 + \dots + a_{2n}u_n\\

&\dots\\

\frac{du_n}{d_t} &= a_{n1}u_1 + a_{n2}u_2 + \dots + a_{nn}u_n\\

\end{align*} d t d u 1 d t d u 2 d t d u n = a 11 u 1 + a 12 u 2 + ⋯ + a 1 n u n = a 21 u 1 + a 22 u 2 + ⋯ + a 2 n u n … = a n 1 u 1 + a n 2 u 2 + ⋯ + a nn u n or more compactly,

d u d t = A u \begin{align*}

\frac{d\vv u}{dt} = A\vv u\tag{LTI}

\end{align*} d t d u = A u ( LTI ) for A ∈ R n × n A\in \mathbb{R}^{n\times n} A ∈ R n × n

The question now becomes: what are the solutions to (LTI) (SOL)

u ( t ) = e λ t v \begin{align*}

\vv u(t) = e^{\lambda t} \vv v \tag{GUESS}

\end{align*} u ( t ) = e λ t v ( GUESS ) is a solution to (LTI) λ ∈ R \lambda \in \mathbb R λ ∈ R v ∈ R n \vv v\in \mathbb{R}^n v ∈ R n u i ( t ) = e λ t v i u_i(t) = e^{\lambda t} v_i u i ( t ) = e λ t v i (GUESS) same exponential function.

Let’s start by computing the time derivatives of both sides of (GUESS)

u ( t ) = e λ t v for all t ⟺ d d t u ( t ) = d d t e λ t v for all t ⟺ A u = λ e λ t v for all t (by (LTI)) ⟺ A e λ t v = λ e λ t v for all t (by (GUESS)) ⟺ A v = λ v for all t (since e λ t is a positive scalar) \begin{align*}

\vv u(t) = e^{\lambda t} \vv v \quad\text{for all $t$} &\iff \frac{d}{dt} \vv u(t) = \frac{d}{dt} e^{\lambda t} \vv v \quad\text{for all $t$} \\

&\iff A\vv u = \lambda e^{\lambda t} \vv v \quad\text{for all $t$} \qquad\text{(by (LTI))}\\

&\iff Ae^{\lambda t} \vv v = \lambda e^{\lambda t} \vv v \quad\text{for all $t$} \qquad\text{(by (GUESS))}\\

&\iff A \vv v = \lambda \vv v \quad\text{for all $t$} \qquad\text{(since $e^{\lambda t}$ is a positive scalar)}

\end{align*} u ( t ) = e λ t v for all t ⟺ d t d u ( t ) = d t d e λ t v for all t ⟺ A u = λ e λ t v for all t (by (LTI)) ⟺ A e λ t v = λ e λ t v for all t (by (GUESS)) ⟺ A v = λ v for all t (since e λ t is a positive scalar) Therefore (GUESS) (LTI) λ v = A v \lambda \vv v = A \vv v λ v = A v eigenvalue/eigenvector

4 Eigenvalues and Eigenvectors in Linear ODEs ¶ A key obesrvation is that we can reduce solving (LTI) n n n A A A diagonalizable V = [ v 1 v 2 … v n ] V = \bm \vv{v_1} & \vv{v_2} & \dots & \vv{v_n}\em V = [ v 1 v 2 … v n ] n × n n\times n n × n A A A D = diag ( λ 1 , … , λ n ) D = \text{diag}(\lambda_1, \dots, \lambda_n) D = diag ( λ 1 , … , λ n ) A = V D V − 1 A = VDV^{-1} A = V D V − 1

d d t u = V D V − 1 u \begin{align*}

\frac{d}{dt} \vv u = VDV^{-1} \vv u \tag{S1}

\end{align*} d t d u = V D V − 1 u ( S1 ) Rewriting this linear transformation in the eigenbasis given by V V V V y = u V \vv y = \vv u V y = u y = V − 1 u \vv y = V^{-1}\vv u y = V − 1 u d u d t = V d y d t \frac{d \vv u}{dt} = V\frac{d\vv y}{dt} d t d u = V d t d y (S1)

V d y d t = V D y \begin{align*}

V \frac{d\vv y}{d t} = VD\vv y\tag{S2}

\end{align*} V d t d y = V D y ( S2 ) Since V V V (S2)

d y d t = D y \begin{align*}

\frac{d\vv y}{dt} = D\vv y \tag{S3}

\end{align*} d t d y = D y ( S3 ) Thus by working in the coordinate system defined by the eigenbasis V V V (LTI) n n n (S3)

d y i d t = λ i y i \begin{align*}

\frac{d y_i}{dt} = \lambda_i y_i \tag{Si}

\end{align*} d t d y i = λ i y i ( Si ) The general solution to (Si) y i ( t ) = c i e λ i ( t − t 0 ) y_i(t) = c_ie^{\lambda_i(t - t_0)} y i ( t ) = c i e λ i ( t − t 0 ) c i = y i ( c 0 ) c_i = y_i(c_0) c i = y i ( c 0 ) y i ( t 0 ) y_i(t_0) y i ( t 0 ) y ( t 0 ) = V − 1 u ( t 0 ) \vv y (t_0) = V^{-1} \vv u (t_0) y ( t 0 ) = V − 1 u ( t 0 )

Now, given the solution y ( t ) = ( y 1 ( t ) , … , y n ( t ) ) \vv y(t) = (y_1(t), \dots, y_n(t)) y ( t ) = ( y 1 ( t ) , … , y n ( t )) V V V

u ( t ) = V y ( t ) = c 1 e λ 1 ( t − t 0 ) v 1 + c 2 e λ 2 ( t − t 0 ) v 2 + ⋯ + c n e λ n ( t − t 0 ) v n \begin{align*}

\vv u(t) &= V \vv y(t) \\

&= c_1 e^{\lambda_1 (t - t_0)} \vv{v_1} + c_2 e^{\lambda_2 (t - t_0)} \vv{v_2} + \dots + c_ne^{\lambda_n (t - t_0)} \vv{v_n} \tag{SOL}

\end{align*} u ( t ) = V y ( t ) = c 1 e λ 1 ( t − t 0 ) v 1 + c 2 e λ 2 ( t − t 0 ) v 2 + ⋯ + c n e λ n ( t − t 0 ) v n ( SOL ) where we remember that c = V − 1 u ( t 0 ) \vv c = V^{-1} u(t_0) c = V − 1 u ( t 0 )

4.1 Solving Linear ODEs when A A A ¶ In the previous section, we just showed something incredibly powerful, which we summarize in the following theorem.

Theorem 1 (The solution to a homogeneous linear time-invariant first order dynamical system with diagonalizable

A A A )

Let d d t u = A u \frac{d}{dt}\vv u = A\vv u d t d u = A u A A A λ 1 , λ 2 , … , λ n \lambda_1, \lambda_2, \dots, \lambda_n λ 1 , λ 2 , … , λ n A A A v 1 , v 2 , … , v n \vv{v_1}, \vv{v_2}, \dots, \vv{v_n} v 1 , v 2 , … , v n

Then, any solution to this linear ODE is a linear combination of the functions

{ e λ 1 ( t − t 0 ) v 1 , e λ 2 ( t − t 0 ) v 2 , … , e λ n ( t − t 0 ) v n } \begin{align*}

\{ e^{\lambda_1 (t - t_0)}\vv{v_1}, e^{\lambda_2 (t - t_0)}\vv{v_2}, \dots , e^{\lambda_n(t - t_0)}\vv{v_n} \}

\end{align*} { e λ 1 ( t − t 0 ) v 1 , e λ 2 ( t − t 0 ) v 2 , … , e λ n ( t − t 0 ) v n } i.e., these form a basis (LTI) c = V − 1 u ( t 0 ) \vv{c} = V^{-1}u(t_0) c = V − 1 u ( t 0 )

Example 2 (Solving a linear ODE with

2 × 2 2\times 2 2 × 2 coefficient matrix)

Consider d d t u = A u \frac{d}{dt}\vv u = A\vv u d t d u = A u A = [ 1 − 1 2 4 ] A = \bm 1&-1\\2&4\em A = [ 1 2 − 1 4 ] A A A

λ 1 = 2 , v 1 = [ 1 − 1 ] and λ 2 = 3 , v 2 = [ 1 − 2 ] , \begin{align*}

\lambda_1 = 2, \vv{v_1} = \bm 1\\ -1\em \quad\text{and} \quad \lambda_2 = 3, \vv{v_2} = \bm 1\\-2\em,

\end{align*} λ 1 = 2 , v 1 = [ 1 − 1 ] and λ 2 = 3 , v 2 = [ 1 − 2 ] , and therefore solutions u ( t ) \vv u(t) u ( t )

u ( t ) = c 1 e λ 1 ( t − t 0 ) v 1 + c 2 e λ 2 ( t − t 0 ) v 2 = c 1 e 2 ( t − t 0 ) [ 1 − 1 ] + c 2 e 3 ( t − t 0 ) [ 1 − 2 ] . \begin{align*}

\vv u(t) = c_1 e^{\lambda_1 (t - t_0)} \vv{v_1} + c_2 e^{\lambda_2 (t - t_0)}\vv{v_2} = c_1e^{2(t - t_0)}\bm 1\\-1\em + c_2 e^{3(t - t_0)}\bm 1\\-2\em.

\end{align*} u ( t ) = c 1 e λ 1 ( t − t 0 ) v 1 + c 2 e λ 2 ( t − t 0 ) v 2 = c 1 e 2 ( t − t 0 ) [ 1 − 1 ] + c 2 e 3 ( t − t 0 ) [ 1 − 2 ] . Suppose we have the requirement that u ( t 0 ) = [ 3 4 ] \vv u(t_0) = \bm 3\\4\em u ( t 0 ) = [ 3 4 ]

u ( t 0 ) = c 1 [ 1 − 1 ] + c 2 [ 1 − 2 ] = [ 1 1 − 1 − 2 ] [ c 1 c 2 ] = [ 3 4 ] \begin{align*}

\vv u(t_0) = c_1 \bm 1\\-1\em + c_2 \bm 1\\-2\em = \bm 1&1\\-1-2\em \bm c_1\\c_2\em = \bm 3\\4 \em

\end{align*} u ( t 0 ) = c 1 [ 1 − 1 ] + c 2 [ 1 − 2 ] = [ 1 − 1 − 2 1 ] [ c 1 c 2 ] = [ 3 4 ] for [ c 1 c 2 ] = [ 10 − 7 ] \bm c_1\\c_2\em = \bm 10 \\-7\em [ c 1 c 2 ] = [ 10 − 7 ]

u ( t ) = 10 e 2 ( t − t 0 ) [ 1 − 1 ] − 7 e 3 ( t − t 0 ) [ 1 − 2 ] . \begin{align*}

\vv u(t) = 10e^{2(t - t_0)}\bm 1\\-1\em - 7e^{3(t - t_0)}\bm 1\\-2\em.

\end{align*} u ( t ) = 10 e 2 ( t − t 0 ) [ 1 − 1 ] − 7 e 3 ( t − t 0 ) [ 1 − 2 ] . (SOL) u ( t ) \vv u(t) u ( t ) v i \vv{v_i} v i λ i < 0 \lambda_i < 0 λ i < 0 λ i > 0 \lambda_i > 0 λ i > 0 λ i = 0 \lambda_i = 0 λ i = 0

We have assumed real eigenvalues and eigenvectors throughtout. It turns out our analysis holds true even when eigenvalues/vectors are complex; however, interpreting the results as solutions requires a little more care (we’ll address this next class).

(SOL)