3.2 Angles, the Cauchy–Schwarz Inequality, and General Norms

Computing angles between general vectors

Dept. of Electrical and Systems Engineering

University of Pennsylvania

1 Reading

Material related to this page, as well as additional exercises, can be found in ALA Ch. 3.2.

2 Learning Objectives

By the end of this page, you should know:

- the Cauchy–Schwarz inequality
- the generalized angle between vectors
- orthogonality between vectors
- the triangle inequality
- the definition and examples of norms

3 Generalized Angle

Our starting point in defining the notion of angle in a general inner product space is the familiar formula

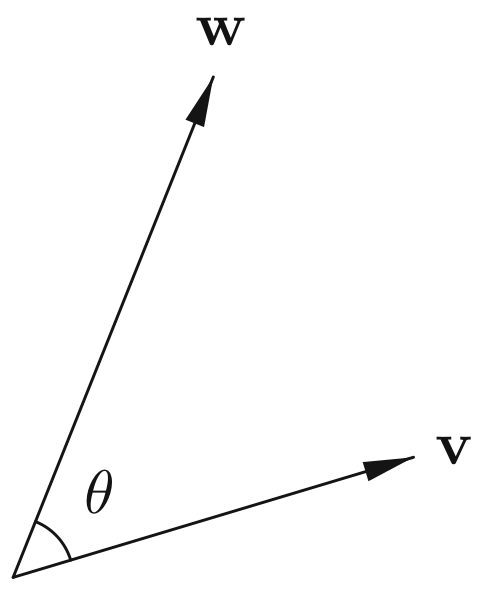

$$
\mathbf{v} \cdot \mathbf{w} = \|\mathbf{v}\| \, \|\mathbf{w}\| \cos(\theta), \tag{1}
$$

where $\theta$ measures the angle between $\mathbf{v}$ and $\mathbf{w}$.

Since $|\cos(\theta)| \leq 1$, the magnitude of the dot product $\mathbf{v} \cdot \mathbf{w}$ is bounded by the product of the lengths:

$$
|\mathbf{v} \cdot \mathbf{w}| \leq \|\mathbf{v}\| \, \|\mathbf{w}\|.
$$

Theorem 1 (Cauchy–Schwarz Inequality)

The simplest form of the Cauchy–Schwarz inequality states that, in an inner product space $V$,

$$
|\langle \mathbf{v}, \mathbf{w} \rangle| \leq \|\mathbf{v}\| \, \|\mathbf{w}\| \quad \text{for all } \mathbf{v}, \mathbf{w} \in V. \tag{2}
$$

Here, $\|\mathbf{v}\| = \sqrt{\langle \mathbf{v}, \mathbf{v} \rangle}$ is the norm induced by the inner product, and $|\cdot|$ denotes the absolute value of a real number.
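As a quick numerical sanity check (evidence, not a proof), the sketch below samples random vectors and confirms that the ratio $|\langle \mathbf{v}, \mathbf{w} \rangle| / (\|\mathbf{v}\| \, \|\mathbf{w}\|)$ never exceeds 1, both for the dot product and for a weighted inner product paired with the norm it induces. The weights, the random seed, and the helper name weighted_inner are illustrative assumptions, not part of the text.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the run is reproducible

def weighted_inner(v, w, weights):
    """Weighted inner product <v, w> = sum_i weights[i] * v[i] * w[i]."""
    return np.sum(weights * v * w)

weights = np.array([1.0, 2.0, 3.0])  # illustrative positive weights (an assumption)

max_ratio = 0.0
for _ in range(1000):
    v = rng.standard_normal(3)
    w = rng.standard_normal(3)
    # Dot product: |v . w| / (||v|| ||w||)
    ratio_dot = abs(np.dot(v, w)) / (np.linalg.norm(v) * np.linalg.norm(w))
    # Weighted inner product, paired with the norm it induces
    norm_v = np.sqrt(weighted_inner(v, v, weights))
    norm_w = np.sqrt(weighted_inner(w, w, weights))
    ratio_wt = abs(weighted_inner(v, w, weights)) / (norm_v * norm_w)
    max_ratio = max(max_ratio, ratio_dot, ratio_wt)

print("largest ratio observed:", max_ratio)  # stays <= 1 (up to round-off)

# Parallel vectors attain equality: here w = -2v
v = np.array([1.0, 0.0, 1.0])
w = -2 * v
ratio_parallel = abs(np.dot(v, w)) / (np.linalg.norm(v) * np.linalg.norm(w))
print("ratio for w = -2v:", ratio_parallel)  # 1 (up to round-off)
```

The last lines show that parallel vectors reach the bound, which is the standard equality case of Cauchy–Schwarz.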

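Definition 1

The angle $\theta$ between two nonzero vectors $\mathbf{v}, \mathbf{w} \in V$ is defined by

$$
\cos(\theta) = \frac{\langle \mathbf{v}, \mathbf{w} \rangle}{\|\mathbf{v}\| \, \|\mathbf{w}\|}. \tag{3}
$$

By the Cauchy–Schwarz inequality,

$$
-1 \leq \frac{\langle \mathbf{v}, \mathbf{w} \rangle}{\|\mathbf{v}\| \, \|\mathbf{w}\|} \leq 1.
$$

Hence, $\theta$ is well defined, and it is unique if restricted to lie in $[0, \pi]$.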
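A minimal sketch of Definition 1 as a reusable function, assuming the inner product is passed in as a Python callable (the name angle and its inner parameter are hypothetical). The np.clip call guards against floating-point round-off pushing the cosine slightly outside $[-1, 1]$, where np.arccos would return nan.

```python
import numpy as np

def angle(v, w, inner=np.dot):
    """Angle theta in [0, pi] between nonzero v and w under the inner product `inner`.

    `inner` is any function (v, w) -> float; it defaults to the dot product.
    """
    norm_v = np.sqrt(inner(v, v))
    norm_w = np.sqrt(inner(w, w))
    cos_theta = inner(v, w) / (norm_v * norm_w)
    # Cauchy-Schwarz guarantees -1 <= cos_theta <= 1 in exact arithmetic; clip
    # to guard against round-off pushing it slightly outside that interval.
    return np.arccos(np.clip(cos_theta, -1.0, 1.0))

v = np.array([1.0, 0.0, 1.0])
w = np.array([0.0, 1.0, 1.0])
print(angle(v, w))  # approximately pi/3 = 1.0472...
```

Because the inner product is a parameter, the same function covers the weighted and polynomial examples that follow.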

4 Angles between Generic Vectors

Using the dot product, the vectors $\mathbf{v} = \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}$ and $\mathbf{w} = \begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix}$ satisfy $\mathbf{v} \cdot \mathbf{w} = 1$ and $\|\mathbf{v}\| = \|\mathbf{w}\| = \sqrt{2}$, so

$$
\cos(\theta) = \frac{1}{\sqrt{2}\sqrt{2}} = \frac{1}{2} \Rightarrow \theta = \arccos\left(\frac{1}{2}\right) = \frac{\pi}{3} \ \text{rad},
$$

which is the usual notion of angle.

We can also compute the angle between $\mathbf{v}$ and $\mathbf{w}$ with respect to the weighted inner product $\langle \mathbf{v}, \mathbf{w} \rangle = v_1 w_1 + 2 v_2 w_2 + 3 v_3 w_3$. In this case $\langle \mathbf{v}, \mathbf{w} \rangle = 3$, $\|\mathbf{v}\| = 2$, and $\|\mathbf{w}\| = \sqrt{5}$, so

$$
\cos(\theta) = \frac{3}{2\sqrt{5}} \approx 0.67082 \Rightarrow \theta = \arccos(0.67082) \approx 0.83548 \ \text{rad}.
$$
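The same numbers can be reproduced by writing the weighted inner product as $\langle \mathbf{v}, \mathbf{w} \rangle = \mathbf{v}^T K \mathbf{w}$ with the diagonal weight matrix $K = \operatorname{diag}(1, 2, 3)$; this matrix form and the helper name inner_K are our own framing for illustration.

```python
import numpy as np

# Weighted inner product <v, w> = v1 w1 + 2 v2 w2 + 3 v3 w3, written as v^T K w
# with the diagonal weight matrix K = diag(1, 2, 3).
K = np.diag([1.0, 2.0, 3.0])

def inner_K(v, w):
    return v @ K @ w

v = np.array([1.0, 0.0, 1.0])
w = np.array([0.0, 1.0, 1.0])

ip = inner_K(v, w)               # 3
norm_v = np.sqrt(inner_K(v, v))  # 2
norm_w = np.sqrt(inner_K(w, w))  # sqrt(5)
theta = np.arccos(ip / (norm_v * norm_w))
print(ip, norm_v, norm_w, theta)  # theta is about 0.83548 rad
```

Swapping K for any other symmetric positive definite matrix gives a different inner product, and hence a different angle, between the same two vectors.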

We can also define angles between vectors in a general vector space, for example, a space of polynomials. For $p(x) = a_0 + a_1 x + a_2 x^2$ and $q(x) = b_0 + b_1 x + b_2 x^2$ in $P^{(2)}$, consider the inner product $\langle p, q \rangle = a_0 b_0 + a_1 b_1 + a_2 b_2$, which is simply the dot product of the coefficient vectors $\mathbf{p} = \begin{bmatrix} a_0 \\ a_1 \\ a_2 \end{bmatrix}$ and $\mathbf{q} = \begin{bmatrix} b_0 \\ b_1 \\ b_2 \end{bmatrix}$. With this definition, the angle $\theta$ between $p(x)$ and $q(x)$ satisfies

$$
\cos(\theta) = \frac{\langle p, q \rangle}{\|p\| \, \|q\|} = \frac{\langle \mathbf{p}, \mathbf{q} \rangle}{\|\mathbf{p}\| \, \|\mathbf{q}\|}.
$$

For example, if $p(x) = 1 + x^2$ and $q(x) = x + x^2$, then $\langle p, q \rangle = 1$ and $\|p\| = \|q\| = \sqrt{2}$, so $\cos(\theta) = \frac{1}{2} \Rightarrow \theta = \frac{\pi}{3}$.
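A sketch of the polynomial example, assuming we store polynomials with NumPy's numpy.polynomial.Polynomial class and read off their coefficients through its coef attribute; the helpers coeffs and poly_inner are hypothetical names. The inner product is then just the dot product of zero-padded coefficient vectors, exactly as in the text.

```python
import numpy as np
from numpy.polynomial import Polynomial

def coeffs(p, degree=2):
    """Coefficient vector [a0, a1, ..., a_degree] of p, zero-padded to length degree + 1."""
    c = np.asarray(p.coef, dtype=float)
    return np.pad(c, (0, degree + 1 - len(c)))

def poly_inner(p, q):
    """<p, q> = a0 b0 + a1 b1 + a2 b2, i.e. the dot product of coefficient vectors."""
    return np.dot(coeffs(p), coeffs(q))

p = Polynomial([1, 0, 1])   # p(x) = 1 + x^2
q = Polynomial([0, 1, 1])   # q(x) = x + x^2

cos_theta = poly_inner(p, q) / np.sqrt(poly_inner(p, p) * poly_inner(q, q))
theta = np.arccos(cos_theta)
print(cos_theta, theta)  # cos(theta) = 1/2, theta is about pi/3
```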

Python break!

We show how to use the NumPy functions np.dot, np.linalg.norm, and np.arccos to compute the angle between vectors. We also show how to obtain the cosine similarity from the cosine distance between vectors using the scipy.spatial library.

```python
# angle between vectors
import numpy as np
from scipy.spatial import distance

v = np.array([1, 0, 1])
w = np.array([0, 1, 1])

# cos(theta) = (v . w) / (||v|| ||w||)
cos_theta = np.dot(v, w) / (np.linalg.norm(v) * np.linalg.norm(w))
theta = np.arccos(cos_theta)
print(f"Angle between v and w is: {theta} rad")

# cosine distance -> cosine similarity
# distance.cosine returns 1 - cos(theta), so subtracting it from 1 recovers cos(theta)
cosine_dist = distance.cosine(v, w)
cosine_sim = 1 - cosine_dist
print(f"Cosine similarity between v and w is: {cosine_sim}")
```

```
Angle between v and w is: 1.0471975511965979 rad
Cosine similarity between v and w is: 0.5
```

5 Orthogonal Vectors

The notion of perpendicular vectors is an important one in Euclidean geometry. These are vectors that meet at a right angle, i.e., $\theta = \frac{\pi}{2}$ or $\theta = -\frac{\pi}{2}$, so that $\cos \theta = 0$. By the formula $\mathbf{v} \cdot \mathbf{w} = \|\mathbf{v}\| \, \|\mathbf{w}\| \cos(\theta)$ that we used to motivate the Cauchy–Schwarz inequality, two nonzero vectors $\mathbf{v}$ and $\mathbf{w}$ are therefore perpendicular if and only if $\mathbf{v} \cdot \mathbf{w} = 0$.

We continue with our strategy of extending familiar geometric concepts in Euclidean space to general inner product spaces. For historical reasons, we use the term orthogonal instead of perpendicular.

Definition 2 (Orthogonal)

Two elements $\mathbf{v}, \mathbf{w} \in V$ of an inner product space $V$ are called orthogonal with respect to $\langle \cdot, \cdot \rangle$ if $\langle \mathbf{v}, \mathbf{w} \rangle = 0$.

Orthogonality is an incredibly useful and practical idea that appears throughout engineering, AI, and economics; we will explore it in detail in the next lecture.

The vectors $\mathbf{v} = \begin{bmatrix} 1 \\ 2 \end{bmatrix}$ and $\mathbf{w} = \begin{bmatrix} 6 \\ -3 \end{bmatrix}$ are orthogonal with respect to the dot product, since $\mathbf{v} \cdot \mathbf{w} = 1 \cdot 6 + 2 \cdot (-3) = 0$.

However, $\mathbf{v}$ and $\mathbf{w}$ are not orthogonal with respect to the weighted inner product $\langle \mathbf{v}, \mathbf{w} \rangle = v_1 w_1 + 2 v_2 w_2$:

$$
\langle \mathbf{v}, \mathbf{w} \rangle = \left\langle \begin{bmatrix} 1 \\ 2 \end{bmatrix}, \begin{bmatrix} 6 \\ -3 \end{bmatrix} \right\rangle = 1(1 \cdot 6) + 2(2 \cdot (-3)) = 6 - 12 = -6 \neq 0.
$$

The polynomials $f(x) = x$ and $g(x) = 1 + x^2$ are orthogonal in $P^{(2)}$ with respect to the inner product $\langle p, q \rangle = a_0 b_0 + a_1 b_1 + a_2 b_2$: here $a_0 = 0, a_1 = 1, a_2 = 0$ and $b_0 = 1, b_1 = 0, b_2 = 1$, so

$$
\langle f, g \rangle = 0 \cdot 1 + 1 \cdot 0 + 0 \cdot 1 = 0.
$$
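The three orthogonality checks above can be reproduced numerically. The helper is_orthogonal and its tolerance tol are illustrative assumptions; a tolerance is used because floating-point inner products rarely come out exactly zero for non-integer data.

```python
import numpy as np

def is_orthogonal(v, w, inner=np.dot, tol=1e-12):
    """True if <v, w> is zero up to a floating-point tolerance."""
    return abs(inner(v, w)) <= tol

def weighted(v, w):
    # <v, w> = v1 w1 + 2 v2 w2
    return v[0] * w[0] + 2 * v[1] * w[1]

v = np.array([1.0, 2.0])
w = np.array([6.0, -3.0])
print(np.dot(v, w), is_orthogonal(v, w))              # 0.0 True
print(weighted(v, w), is_orthogonal(v, w, weighted))  # -6.0 False

# f(x) = x and g(x) = 1 + x^2 as coefficient vectors [a0, a1, a2]
f = np.array([0.0, 1.0, 0.0])
g = np.array([1.0, 0.0, 1.0])
print(np.dot(f, g), is_orthogonal(f, g))              # 0.0 True
```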

However, $f$ and $g$ are not orthogonal with respect to the inner product $\langle p, q \rangle = \int_0^1 p(x) q(x) \, dx$ on $C^0[0, 1]$:

$$
\langle f, g \rangle = \int_0^1 x(1 + x^2) \, dx = \int_0^1 (x + x^3) \, dx = \left. \frac{x^2}{2} + \frac{x^4}{4} \right|_0^1 = \frac{1}{2} + \frac{1}{4} = \frac{3}{4} \neq 0.
$$
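The integral can be checked exactly with numpy.polynomial.Polynomial: multiply $f$ and $g$, take an antiderivative with integ(), and evaluate it at the endpoints. This is a sketch for polynomial inputs only; a general function in $C^0[0,1]$ would need numerical quadrature instead.

```python
from numpy.polynomial import Polynomial

f = Polynomial([0, 1])      # f(x) = x
g = Polynomial([1, 0, 1])   # g(x) = 1 + x^2

# <f, g> = integral_0^1 f(x) g(x) dx: multiply, take an antiderivative, evaluate at the endpoints
F = (f * g).integ()         # antiderivative of x + x^3, namely x^2/2 + x^4/4
inner_fg = F(1.0) - F(0.0)
print(inner_fg)             # 0.75, so f and g are not orthogonal under this inner product
```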

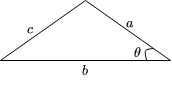

6 The Triangle Inequality

We know, e.g., from the law of cosines, that the length of one side of a triangle is at most the sum of the lengths of the other two sides:

$$
\begin{aligned}
c^2 &= a^2 + b^2 - 2ab\cos(\theta) \\
&\leq a^2 + b^2 + 2ab \quad (\text{since } -\cos(\theta) \leq 1) \\
&= (a+b)^2 \\
\Rightarrow c &\leq a + b.
\end{aligned} \tag{11}
$$

Here $\theta$ is the angle between the sides of lengths $a$ and $b$, and $c$ is the side opposite it. The idea in (11) extends to any inner product space: the length $\|\mathbf{v} + \mathbf{w}\|$ of the sum of two vectors $\mathbf{v}$ and $\mathbf{w}$ is at most the sum of their lengths, i.e., $\|\mathbf{v} + \mathbf{w}\| \leq \|\mathbf{v}\| + \|\mathbf{w}\|$.
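A random spot check of the triangle inequality for the Euclidean norm (evidence, not a proof). The seed and vector dimension are arbitrary choices. The final lines show the degenerate case where $\mathbf{w}$ is a nonnegative multiple of $\mathbf{v}$, so the "triangle" is flat and equality holds.

```python
import numpy as np

rng = np.random.default_rng(1)

worst_gap = np.inf
for _ in range(1000):
    v = rng.standard_normal(4)
    w = rng.standard_normal(4)
    # gap = ||v|| + ||w|| - ||v + w||; the triangle inequality says gap >= 0
    gap = np.linalg.norm(v) + np.linalg.norm(w) - np.linalg.norm(v + w)
    worst_gap = min(worst_gap, gap)

print("smallest gap observed:", worst_gap)

# Equality holds when w is a nonnegative multiple of v (a flat "triangle")
v = np.array([1.0, 2.0, 2.0])
w = 3 * v
print(np.linalg.norm(v + w), np.linalg.norm(v) + np.linalg.norm(w))  # 12.0 12.0
```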

7 Norms

We have seen that inner products allow us to define a natural notion of length. However, there are other sensible ways of measuring the size of a vector that do not arise from an inner product. For example, suppose we choose to measure the size of a vector by its "taxicab distance": we pretend we are a cab driver in Manhattan who can only drive north-south and east-west. We then end up with a different measure of length that makes lots of sense!

Consider the vector $\mathbf{v} = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$. Its Euclidean length is $\|\mathbf{v}\| = \sqrt{1^2 + (-1)^2} = \sqrt{2}$, while its taxicab length $\|\mathbf{v}\|_1$ is

$$
\|\mathbf{v}\|_1 = |1| \ \text{(drive 1 unit east)} + |-1| \ \text{(drive 1 unit south)} = 2.
$$

These are different!
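The two lengths of $\mathbf{v} = \begin{bmatrix} 1 \\ -1 \end{bmatrix}$ can be computed directly from their definitions, as a minimal illustration:

```python
import numpy as np

v = np.array([1.0, -1.0])

euclidean = np.sqrt(np.sum(v ** 2))  # straight-line length: sqrt(1 + 1)
taxicab = np.sum(np.abs(v))          # east-west distance plus north-south distance
print(euclidean, taxicab)            # 1.4142135623730951 2.0
```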

To define a general norm on a vector space, we will extract properties that "make sense" for a measure of length but that do not rely directly on an inner product structure (such as angles).

Definition 3 (Norm)

A norm on a vector space $V$ assigns a real number $\|\mathbf{v}\|$ to each vector $\mathbf{v} \in V$, subject to the following axioms for all $\mathbf{v}, \mathbf{w} \in V$ and $c \in \mathbb{R}$:

(i) Positivity: $\|\mathbf{v}\| \geq 0$, with $\|\mathbf{v}\| = 0$ if and only if $\mathbf{v} = \mathbf{0}$.

(ii) Homogeneity: $\|c\mathbf{v}\| = |c| \, \|\mathbf{v}\|$.

(iii) Triangle inequality: $\|\mathbf{v} + \mathbf{w}\| \leq \|\mathbf{v}\| + \|\mathbf{w}\|$.

Axiom (i) says "length" should always be nonnegative, and only the zero vector has zero length (seems reasonable!).

Axiom (ii) says that if I stretch or shrink a vector $\mathbf{v}$ by a factor $c \in \mathbb{R}$, its length should scale accordingly (this is why we call $c \in \mathbb{R}$ a scalar!). Note that $c < 0$ means we stretch or shrink by $|c|$ and also flip the direction of $\mathbf{v}$, and flipping should not change the length: $\|c\mathbf{v}\| = \|-c\mathbf{v}\| = |c| \, \|\mathbf{v}\|$.

Axiom (iii) tells us that lengths of sums of vectors should "behave as if there is a cosine rule" even when there is no notion of angle. This property is less intuitive, but it has been identified as key to making norms useful to work with.
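The axioms can be turned into a rough test harness. The sketch below (the function spot_check_norm and its tolerance are hypothetical, introduced only for illustration) samples random vectors and scalars and reports the first axiom it sees violated. Random sampling can only find counterexamples; passing does not prove that a function is a norm.

```python
import numpy as np

def spot_check_norm(norm, dim=3, trials=500, seed=0, tol=1e-9):
    """Randomly test the three norm axioms for a candidate function `norm`.

    Returns the name of the first axiom found to fail, or None if no violation
    turned up. Passing is evidence, not a proof.
    """
    rng = np.random.default_rng(seed)
    # (i) the zero vector must have length zero
    if abs(norm(np.zeros(dim))) > tol:
        return "positivity"
    for _ in range(trials):
        v, w = rng.standard_normal(dim), rng.standard_normal(dim)
        c = rng.standard_normal()
        # (i) nonzero vectors must have positive length
        if norm(v) <= 0:
            return "positivity"
        # (ii) ||c v|| = |c| ||v||, compared with a relative tolerance
        if abs(norm(c * v) - abs(c) * norm(v)) > tol * (1 + abs(c) * norm(v)):
            return "homogeneity"
        # (iii) ||v + w|| <= ||v|| + ||w||
        if norm(v + w) > norm(v) + norm(w) + tol:
            return "triangle inequality"
    return None

print(spot_check_norm(lambda x: np.sum(np.abs(x))))  # None: the 1-norm passes
print(spot_check_norm(np.linalg.norm))               # None: the Euclidean norm passes
print(spot_check_norm(lambda x: np.sum(x ** 2)))     # homogeneity: squared length is not a norm
```

The squared Euclidean length fails because $\|c\mathbf{v}\|$ would grow like $c^2$ rather than $|c|$.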

We now introduce two other norms that are commonly used in practice, but you should know that there are many more.

The 1-norm of a vector $\mathbf{v} = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} \in \mathbb{R}^n$ is the sum of the absolute values of its entries:

$$
\|\mathbf{v}\|_1 = |v_1| + |v_2| + \ldots + |v_n|,
$$

which we recognize as our taxicab distance.

The $\infty$-norm, or max-norm, is given by the maximal entry in absolute value:

$$
\|\mathbf{v}\|_{\infty} = \max\{|v_1|, |v_2|, \ldots, |v_n|\}.
$$

Checking the axioms of Definition 3 for both of these norms is straightforward; the triangle inequality follows from the inequality $|a + b| \leq |a| + |b|$, which holds for all real numbers $a, b \in \mathbb{R}$.
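Both norms take one line each in NumPy. The sketch below implements them from their definitions (one_norm and max_norm are illustrative names) and compares the results against np.linalg.norm with ord=1 and ord=np.inf.

```python
import numpy as np

def one_norm(v):
    """Sum of absolute values of the entries (taxicab length)."""
    return np.sum(np.abs(v))

def max_norm(v):
    """Largest entry in absolute value."""
    return np.max(np.abs(v))

v = np.array([3.0, -7.0, 2.0])
print(one_norm(v), max_norm(v))                                 # 12.0 7.0
print(np.linalg.norm(v, ord=1), np.linalg.norm(v, ord=np.inf))  # 12.0 7.0
```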

The 1-norm, $\infty$-norm, and Euclidean norm (also called the 2-norm) are examples of the general $p$-norm:

$$
\|\mathbf{v}\|_p = \left( \sum_{i=1}^n |v_i|^p \right)^{1/p} \quad (p\text{-norm}),
$$

which can be shown to be a valid norm for $1 \leq p < \infty$. The $\infty$-norm is obtained as the limit of the $p$-norm as $p \to \infty$.
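A direct implementation of the $p$-norm formula, with a loop that illustrates the limit $p \to \infty$: as $p$ grows, the largest entry in absolute value dominates the sum. The function name p_norm and the sample vector are illustrative.

```python
import numpy as np

def p_norm(v, p):
    """(sum_i |v_i|^p)^(1/p), a norm for 1 <= p < infinity."""
    return np.sum(np.abs(v) ** p) ** (1.0 / p)

v = np.array([1.0, -2.0, 3.0])
for p in [1, 2, 4, 10, 50]:
    print(p, p_norm(v, p))
print("inf", np.max(np.abs(v)))  # the values above decrease toward 3.0 as p grows
```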

The hard part in showing that the $p$-norm is a norm is verifying axiom (iii); the triangle inequality for $p$-norms is known as Minkowski's inequality.
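Minkowski's inequality can be spot-checked numerically with np.linalg.norm, which accepts a real-valued ord for vectors (the sampled $p$ values and the seed are arbitrary). The second part shows why the restriction $p \geq 1$ matters: for $p = 0.5$ the same formula violates the triangle inequality on the standard basis vectors.

```python
import numpy as np

rng = np.random.default_rng(2)

worst = np.inf
for p in [1.0, 1.5, 2.0, 3.0, 7.0]:
    for _ in range(500):
        v, w = rng.standard_normal(5), rng.standard_normal(5)
        # Minkowski: ||v + w||_p <= ||v||_p + ||w||_p
        gap = (np.linalg.norm(v, ord=p) + np.linalg.norm(w, ord=p)
               - np.linalg.norm(v + w, ord=p))
        worst = min(worst, gap)
print("smallest gap over all p tried:", worst)  # nonnegative up to round-off

# For 0 < p < 1 the same formula breaks the triangle inequality:
p = 0.5
e1, e2 = np.array([1.0, 0.0]), np.array([0.0, 1.0])
lhs = np.sum(np.abs(e1 + e2) ** p) ** (1 / p)   # (1 + 1)^2 = 4
rhs = 2 * np.sum(np.abs(e1) ** p) ** (1 / p)    # ||e1|| + ||e2|| = 1 + 1 = 2
print(lhs, rhs)  # 4.0 2.0
```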

```python
# Different norms
import numpy as np

v = np.array([1, -2])

v1 = np.linalg.norm(v, ord=1)         # 1-norm: |1| + |-2|
v2 = np.linalg.norm(v)                # default is the 2-norm (Euclidean)
vinf = np.linalg.norm(v, ord=np.inf)  # infinity norm: max(|1|, |-2|)

print(f"1-norm: {v1}\n2-norm: {v2}\ninfinity norm: {vinf}")
```

```
1-norm: 3.0
2-norm: 2.23606797749979
infinity norm: 2.0
```